Composition and structure

The cache (high-speed buffer memory) is the first level of memory between the main memory and the CPU. It is built from static RAM (SRAM) chips. Its capacity is relatively small, but it is much faster than main memory, close to the speed of the CPU.

It is mainly composed of three parts:

Cache memory body: stores the instructions and data blocks transferred from main memory.

Address translation component: maintains a directory table that converts main memory addresses to cache addresses.

Replacement component: when the cache is full, replaces data blocks according to a given strategy and updates the address translation component.

Working principle

Cache memory usually consists of a high-speed memory, an associative memory, a replacement logic circuit and the corresponding control circuit. In a computer system with a cache, the address the central processor uses to access main memory is divided into three fields: row number, column number, and address within the group. Main memory is therefore logically divided into several rows; each row is divided into several memory cell groups; each group contains from several to several dozen words. The high-speed memory is likewise divided into rows and columns of memory cell groups. Both have the same number of columns and the same group size, but the high-speed memory has far fewer rows than main memory.
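As a rough illustration of the three-field address described above, the following sketch splits a word address into row number, column number and address within the group. The field widths (`OFFSET_BITS`, `COLUMN_BITS`) and the function name are assumptions chosen for the example, not values from any particular machine.

```python
# Hypothetical field widths, chosen only for illustration.
OFFSET_BITS = 4   # 16 words per memory cell group
COLUMN_BITS = 6   # 64 columns in both main memory and the high-speed memory

def split_address(addr):
    """Split a word address into (row, column, address within the group)."""
    offset = addr & ((1 << OFFSET_BITS) - 1)
    column = (addr >> OFFSET_BITS) & ((1 << COLUMN_BITS) - 1)
    row = addr >> (OFFSET_BITS + COLUMN_BITS)
    return row, column, offset

print(split_address(0x12345))  # (72, 52, 5)
```

The row number takes the high-order bits because main memory has many more rows than the high-speed memory, while the column and in-group fields are shared by both.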

The associative memory is used for address association. It has the same number of rows and columns of storage units as the high-speed memory. When a memory cell group in a certain column and row of main memory is transferred to an empty memory cell group in the same column of the high-speed memory, the corresponding cell of the associative memory records the row number that the transferred group has in main memory.

When the central processing unit accesses main memory, the hardware first automatically decodes the column number field of the access address and compares all the row numbers stored for that column in the associative memory with the row number field of the main memory address. If one of them matches, the main memory unit to be accessed is already in the high-speed memory, which is called a hit. The hardware maps the main memory address to the high-speed memory address and executes the access. If none matches, the unit is not in the high-speed memory, which is called a miss. The hardware then accesses main memory and automatically transfers the main memory cell group containing the unit into an empty memory cell group in the same column of the high-speed memory. At the same time, the row number of the group in main memory is stored in the corresponding location of the associative memory.

When a miss occurs and there is no empty position in the corresponding column of the high-speed memory, one group in that column is evicted to make room for the newly transferred group, which is called replacement. The rules for choosing the group to evict are called replacement algorithms. Commonly used replacement algorithms include least recently used (LRU), first-in first-out (FIFO), and random (RAND). The replacement logic circuit performs this function. In addition, when performing a write to main memory, hits and misses must be handled separately in order to keep the contents of main memory and the high-speed memory consistent.
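The hit, miss and replacement steps above can be modelled in a few lines. This is only a toy software sketch: the class name `ColumnCache` is hypothetical, LRU is picked as the replacement rule purely as an example, and real hardware compares all row numbers of a column in parallel rather than through a dictionary lookup.

```python
from collections import OrderedDict

class ColumnCache:
    """Toy model: each column holds up to `rows` groups, tagged by main-memory row number."""

    def __init__(self, columns, rows):
        self.rows = rows
        # One ordered dict per column plays the role of the associative memory;
        # keys are main-memory row numbers, ordered from least to most recently used.
        self.tags = [OrderedDict() for _ in range(columns)]

    def access(self, row, column):
        entries = self.tags[column]
        if row in entries:
            entries.move_to_end(row)       # hit: mark the group as most recently used
            return "hit"
        if len(entries) >= self.rows:      # no empty position in this column
            entries.popitem(last=False)    # replacement: evict the least recently used group
        entries[row] = True                # load the group and record its row number
        return "miss"

cache = ColumnCache(columns=4, rows=2)
trace = [(0, 1), (5, 1), (0, 1), (9, 1), (5, 1)]   # (row, column) pairs
results = [cache.access(r, c) for r, c in trace]
print(results)  # ['miss', 'miss', 'hit', 'miss', 'miss']
```

In the trace, the fourth access finds column 1 full and evicts row 5, so the fifth access to row 5 misses again.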

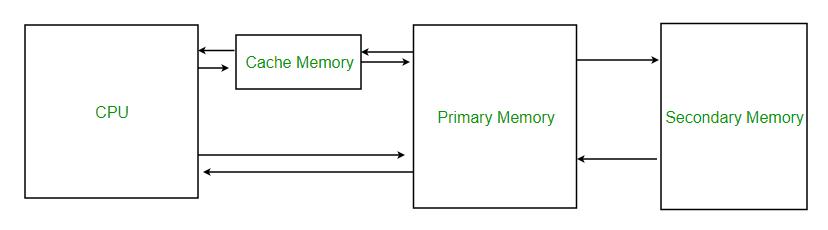

Storage hierarchy

The main-auxiliary storage hierarchy exists because a computer's main memory capacity is always too small relative to the capacity programmers require. Originally, the transfer of programs and data from auxiliary storage to main memory was arranged by the programmer. The programmer had to spend a great deal of time and effort dividing a large program into blocks in advance and determining where each block resided in auxiliary storage, the address at which it would be loaded into main memory, and how and when each block would be swapped in and out while the program was running. This is the problem of memory space allocation. The formation and development of operating systems freed programmers, as far as possible, from managing address placement between main and auxiliary memory, and at the same time gave rise to the "auxiliary hardware" that supports these functions. Through the combination of software and hardware, main memory and auxiliary memory are unified into a whole, as shown in the picture. Main storage and auxiliary storage then form a storage hierarchy, that is, a storage system. Taken as a whole, its speed is close to that of main storage, its capacity is close to that of auxiliary storage, and its average price per bit is close to that of cheap, slow auxiliary storage. The continuous development and improvement of this system gradually produced the now widely used virtual storage system.

In such a system, the application programmer can address the entire program uniformly with machine instruction address codes, as if the programmer had the whole virtual memory space corresponding to the width of the address code. This space can be much larger than the actual main memory, so that the entire program fits. This instruction address code is called the virtual address (virtual memory address) or logical address, and its corresponding storage capacity is called the virtual memory capacity or virtual memory space. The address of actual main memory is called the physical address or real (storage) address, and its corresponding storage capacity is called the main storage capacity, real storage capacity, or real (main) storage space.

Main-auxiliary storage hierarchy

Cache-main storage hierarchy

When a virtual address is used to access main memory, the machine automatically converts it to a real main memory address through auxiliary software and hardware, and checks whether the content of the unit corresponding to this address has been loaded into main memory. If it is in main memory, it is accessed. If it is not, the program and data containing it are transferred from auxiliary memory to main memory by the auxiliary software and hardware, and then accessed. These operations do not have to be arranged by the programmer; that is, they are transparent to the application programmer. The main-auxiliary storage level resolves the conflict between the demand for large memory capacity and the demand for low cost.

In terms of speed, the computer's main memory and CPU have remained about an order of magnitude apart. This gap clearly limits the potential of the CPU. A single memory built with only one technology cannot bridge this gap, so further work must be done on the structure and organization of the computer system. Setting up a cache is an important way to solve the access speed problem. A cache is placed between the CPU and main memory to form a cache-main memory level, and the cache is required to keep up with the CPU in speed. Address mapping and scheduling between cache and main memory borrow techniques from the main-auxiliary storage level, which appeared earlier. The difference is that, because of the high speed required, it is implemented entirely in hardware rather than by a combination of software and hardware, as the picture shows.
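The virtual-to-real address conversion described above can be sketched as follows. The text does not say how the virtual space is divided, so fixed-size pages, the `PAGE_SIZE` value and the `VirtualMemory` class are all assumptions made for illustration; loading from auxiliary storage is reduced to claiming a free frame, and replacement when no frame is free is left out.

```python
PAGE_SIZE = 4096  # hypothetical page size in bytes

class VirtualMemory:
    def __init__(self, frames):
        self.free_frames = list(range(frames))
        self.page_table = {}   # virtual page number -> main-memory frame number
        self.faults = 0

    def translate(self, vaddr):
        """Convert a virtual address to a real address, loading the page if needed."""
        page, offset = divmod(vaddr, PAGE_SIZE)
        if page not in self.page_table:
            # Not yet in main memory: "transfer it from auxiliary storage".
            # This sketch only claims a free frame and assumes one exists.
            self.faults += 1
            self.page_table[page] = self.free_frames.pop(0)
        return self.page_table[page] * PAGE_SIZE + offset

vm = VirtualMemory(frames=4)
a = vm.translate(0x5010)   # page 5 is loaded into frame 0
b = vm.translate(0x5ABC)   # same page, already in main memory
c = vm.translate(0x2004)   # page 2 is loaded into frame 1
print(a, b, c, vm.faults)  # 16 2748 4100 2
```

The calling code only ever supplies virtual addresses, which is the sense in which the mechanism is transparent to the application programmer.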

Address mapping and conversion

Address mapping refers to the correspondence between the address of a piece of data in main memory and its address in the cache. The following describes three address mapping methods.

1. Fully associative method

Address mapping rule: any block of main memory can be mapped to any block of the cache.

(1) Main memory and the cache are divided into data blocks of the same size.

(2) Any data block of main memory can be loaded into any block of the cache. If the number of cache blocks is Cb and the number of main memory blocks is Mb, there are Cb × Mb possible mapping relationships.

The directory table is stored in associative memory and has three parts: the block address of the data block in main memory, the block address after it is stored in the cache, and the valid bit (also called the load bit). Since the method is fully associative, the number of entries in the directory table should equal the number of blocks in the cache.

Advantages: the hit rate is relatively high, and cache space utilization is high.

Disadvantages: every access to the associative memory must compare against its entire contents, so the speed is low and the cost is high; the method is therefore rarely used.
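A minimal sketch of the fully associative directory table, under some simplifying assumptions: the cache block address is represented by the entry's index in the table rather than stored as a separate field, and the names `DirectoryEntry`, `lookup` and `load` are hypothetical. The sequential loop stands in for the parallel comparison that associative memory performs in hardware.

```python
from dataclasses import dataclass

@dataclass
class DirectoryEntry:
    valid: bool = False    # valid (load) bit
    main_block: int = -1   # block address in main memory

def lookup(directory, main_block):
    """Return the cache block number holding main_block, or None on a miss."""
    for cache_block, entry in enumerate(directory):
        if entry.valid and entry.main_block == main_block:
            return cache_block
    return None

def load(directory, main_block):
    """Place main_block in the first free cache block; return its index, or None if full."""
    for cache_block, entry in enumerate(directory):
        if not entry.valid:
            entry.valid, entry.main_block = True, main_block
            return cache_block
    return None

directory = [DirectoryEntry() for _ in range(4)]   # Cb = 4 cache blocks
load(directory, 37)
load(directory, 2)
print(lookup(directory, 37), lookup(directory, 2), lookup(directory, 99))  # 0 1 None
```

Every lookup may have to examine every entry, which is the cost the disadvantages above refer to.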

2. Direct mapping method

Address mapping rule: a block in main memory can only be mapped to one specific block in the cache.

(1) Main memory and the cache are divided into data blocks of the same size.

(2) The main memory capacity should be an integer multiple of the cache capacity. The main memory space is divided into areas the size of the cache, and the number of blocks in each area of main memory equals the total number of blocks in the cache.

(3) When a block from some area of main memory is stored in the cache, it can only be stored at the cache location with the same block number.

Data blocks with the same block number in each area of main memory can be transferred to the cache location with that block number, but only one of them can reside in the cache at a time. Since the main memory and cache block numbers are the same, only the area number of the transferred block needs to be recorded in the directory. The block number field and the in-block address field are identical in the main memory address and the cache address. The directory table is stored in a small, high-speed memory and has two parts: the area number of the data block in main memory and the valid bit. The number of entries in the directory table equals the number of cache blocks.

Advantages: the address mapping is simple. When accessing data, only the area number needs to be compared, so access is faster and the hardware is simple.

Disadvantages: replacement operations are frequent and the hit rate is relatively low.
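The direct mapping rule can be sketched as below. `CACHE_BLOCKS` and the class name are assumptions for the example; a stored value of `None` stands for a cleared valid bit.

```python
CACHE_BLOCKS = 8  # hypothetical number of blocks in the cache

def direct_map(main_block):
    """Return (area number, cache block number) for a main-memory block."""
    return main_block // CACHE_BLOCKS, main_block % CACHE_BLOCKS

class DirectMappedCache:
    def __init__(self):
        # Directory table: one area number per cache block (None = not valid).
        self.area = [None] * CACHE_BLOCKS

    def access(self, main_block):
        area, index = direct_map(main_block)
        if self.area[index] == area:   # only one comparison is needed
            return True
        self.area[index] = area        # miss: the resident block is replaced
        return False

cache = DirectMappedCache()
hits = [cache.access(b) for b in [3, 11, 3, 11, 4, 4]]
print(hits)  # [False, False, False, False, False, True]
```

Blocks 3 and 11 share cache block 3, so alternating between them misses every time even though the rest of the cache is empty, which illustrates the frequent replacement noted above.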

3. Set-associative (group-associative) mapping method

The set-associative mapping rule:

(1) Main memory and the cache are divided into blocks of the same size.

(2) Main memory and the cache are divided into groups of the same size.

(3) The main memory capacity is an integer multiple of the cache capacity. The main memory space is divided into areas the size of the cache. The number of groups in each area of main memory is the same as the number of groups in the cache.

(4) When data in main memory is loaded into the cache, the group numbers in main memory and in the cache must be equal; that is, a block in any area can only be stored in the cache group with the same group number, but it may be placed at any block address within that group. In other words, direct mapping is used from the main memory group to the cache group, and fully associative mapping is used within the two corresponding groups.

The conversion between the main memory address and the cache address has two parts. The group address is accessed by direct mapping, and the block address is accessed by content. The set-associative address translation unit is also implemented with associative memory.

Advantages: the probability of block conflicts is relatively low, block utilization is greatly improved, and the block miss rate is significantly reduced.

Disadvantages: it is more difficult and more costly to implement than direct mapping.
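The following sketch follows the area/group/block decomposition in rules (1) to (4). The constants, the class name and the FIFO eviction inside a group are assumptions made for the example; the rules themselves do not prescribe a replacement policy.

```python
GROUPS = 4            # hypothetical number of groups in the cache (and in each area)
BLOCKS_PER_GROUP = 2  # hypothetical number of blocks per group

def decompose(main_block):
    """Return (area, group, block-in-group) for a main-memory block number."""
    block_in_group = main_block % BLOCKS_PER_GROUP
    group = (main_block // BLOCKS_PER_GROUP) % GROUPS
    area = main_block // (BLOCKS_PER_GROUP * GROUPS)
    return area, group, block_in_group

class GroupAssociativeCache:
    def __init__(self):
        # Each cache group holds up to BLOCKS_PER_GROUP tags, oldest first.
        self.groups = [[] for _ in range(GROUPS)]

    def access(self, main_block):
        area, group, block_in_group = decompose(main_block)
        tag = (area, block_in_group)
        slots = self.groups[group]        # direct mapping selects the group
        if tag in slots:                  # associative search within the group
            return True
        if len(slots) == BLOCKS_PER_GROUP:
            slots.pop(0)                  # FIFO eviction, an arbitrary choice here
        slots.append(tag)
        return False

cache = GroupAssociativeCache()
hits = [cache.access(b) for b in [0, 8, 0, 8, 16, 0]]
print(hits)  # [False, False, True, True, False, False]
```

Blocks 0 and 8 fall in the same group but can coexist, so the repeated accesses hit; a third conflicting block (16) forces a replacement.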

Replacement strategy

1. According to the principle of program locality, a running program tends to use the instructions and data it has used recently. This provides a theoretical basis for replacement strategies. Weighing factors such as hit rate, implementation difficulty and speed, the available replacement strategies include the random method, the first-in first-out method, the least recently used method, and others.

(1) Random method (RAND method)

The random method chooses the block to replace at random. A random number generator is set up, and the replacement block is determined from the random number generated. This method is simple and easy to implement, but its hit rate is relatively low.

(2) First-in first-out method (FIFO method)

The first-in first-out method selects the block that was loaded earliest for replacement. A block that was loaded first and has been hit many times may well be replaced first, which does not conform to the principle of locality. The hit rate of this method is better than that of the random method, but it is still not satisfactory. The first-in first-out method is easy to implement.

(3) Least recently used method (LRU method)

The LRU method always selects the least recently used block for replacement, based on the usage of each block. This method better reflects the principle of program locality. There are many ways to implement the LRU strategy.
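To make the difference between FIFO and LRU concrete, here is a small comparison on one access trace. The function names and the trace are made up for illustration; the trace keeps returning to block 1, which is exactly the kind of frequently hit early block that FIFO tends to evict.

```python
from collections import OrderedDict, deque

def fifo_hits(trace, capacity):
    """Count hits when the block loaded earliest is always evicted."""
    resident, order, hits = set(), deque(), 0
    for block in trace:
        if block in resident:
            hits += 1
            continue
        if len(resident) == capacity:
            resident.discard(order.popleft())   # evict the earliest-loaded block
        resident.add(block)
        order.append(block)
    return hits

def lru_hits(trace, capacity):
    """Count hits when the least recently used block is always evicted."""
    resident, hits = OrderedDict(), 0
    for block in trace:
        if block in resident:
            hits += 1
            resident.move_to_end(block)         # refresh recency on a hit
            continue
        if len(resident) == capacity:
            resident.popitem(last=False)        # evict the least recently used block
        resident[block] = True
    return hits

trace = [1, 2, 1, 3, 1, 4, 1, 5, 1]
print(fifo_hits(trace, 2), lru_hits(trace, 2))  # 2 4
```

On this trace LRU keeps block 1 resident throughout, while FIFO evicts it twice despite its repeated use. Other traces can favour FIFO, so the numbers illustrate the mechanism rather than a general ranking.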

2. In a multi-bank parallel storage system, memory requests from I/O devices have higher priority than the CPU's memory accesses. The CPU may therefore have to wait for an I/O device's memory access to finish, sometimes for several main memory cycles, which reduces CPU efficiency. To keep the CPU and I/O devices from competing for memory access, a level of cache can be added between the CPU and main memory. Main memory can then send the information the CPU needs to the cache in advance. While main memory is exchanging data with an I/O device, the CPU can read the required information directly from the cache without waiting and losing efficiency.

3. The algorithms proposed so far can be divided into the following three categories (the first category is the most important to master):

(1) Traditional replacement algorithms and their direct descendants. Representative algorithms are: ① LRU (Least Recently Used): evict the least recently used content from the cache; ② LFU (Least Frequently Used): evict the least frequently accessed content from the cache; ③ if all content in the cache was cached on the same day, evict the largest document; otherwise evict according to the LRU algorithm; ④ FIFO (First In First Out): follow the first-in first-out principle, so that when the cache is full, the item that entered the cache earliest is evicted.

(2) Replacement algorithms based on key features of the cached content. Representative algorithms are: ① Size: evict the largest content from the cache; ② LRU-MIN: this algorithm tries to minimize the number of documents evicted. If the document to be cached has size S, evict, in LRU order, documents in the cache whose size is at least S; if there is no object of size at least S, apply LRU to the documents of size at least S/2; ③ LRU-Threshold: the same as LRU, except that documents whose size exceeds a certain threshold are not cached; ④ Lowest Latency First: evict the documents with the smallest access latency from the cache.
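The victim selection of LRU-MIN, as described above, might be sketched like this. The tuple layout and the function name are assumptions, and continuing to halve the threshold beyond S/2 is an extrapolation of the rule stated in the text; sizes are assumed to be positive.

```python
def lru_min_victim(cache, incoming_size):
    """Pick the document to evict.

    cache: list of (name, size, last_access) tuples; a smaller last_access
    means the document was used longer ago. Returns a name, or None if empty.
    """
    threshold = incoming_size
    while cache:
        candidates = [doc for doc in cache if doc[1] >= threshold]
        if candidates:
            # Among sufficiently large documents, evict the least recently used.
            return min(candidates, key=lambda doc: doc[2])[0]
        threshold /= 2   # nothing that large: relax to S/2, then S/4, and so on
    return None

cache = [("a", 10, 1), ("b", 50, 5), ("c", 60, 3), ("d", 20, 2)]
print(lru_min_victim(cache, 40))                    # c
print(lru_min_victim(cache[:1] + cache[3:], 30))    # d
```

Plain LRU would evict "a" in both cases; LRU-MIN prefers a single document large enough to make room, so fewer documents are evicted overall.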

(3) Cost-based replacement algorithms. Algorithms of this type use a cost function to evaluate the objects in the cache and choose the object to evict based on the resulting cost value. Representative algorithms are: ① Hybrid: assigns a utility function to each object in the cache and evicts the object with the least utility; ② Lowest Relative Value: evicts the object with the lowest utility value; ③ Least Normalized Cost Replacement (LNC-R): uses an inference function of document access frequency, transmission time and size to determine which document to evict; ④ Bolot et al. proposed a weighted inference function based on document transmission time cost, size and last access time to determine which document to evict; ⑤ Size-Adjusted LRU (SLRU): sorts the cached objects by their ratio of cost to size and selects the object with the smallest ratio for eviction.
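As a minimal sketch of the cost-to-size ratio rule described for SLRU above, the function below picks the object with the smallest ratio. The function name, the dictionary layout and the sample costs are hypothetical; how the cost of an object is computed is not specified in the text and is simply given as input here.

```python
def smallest_ratio_victim(objects):
    """objects: dict mapping name -> (cost, size). Return the name with the smallest cost/size."""
    return min(objects, key=lambda name: objects[name][0] / objects[name][1])

objects = {"a.html": (2.0, 10), "b.jpg": (3.0, 300), "c.css": (1.0, 4)}
print(smallest_ratio_victim(objects))  # b.jpg
```

A large object that is cheap to fetch again has a small ratio, so evicting it frees the most space for the least expected cost.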

Function introduction

During the development of computer technology, the access speed of main memory has always been much slower than the operating speed of the central processing unit, so the high-speed processing capacity of the CPU cannot be fully utilized and the efficiency of the whole computer system suffers. There are many ways to ease the speed mismatch between the central processing unit and main memory, such as using multiple general-purpose registers or multi-bank interleaving. Using a cache in the storage hierarchy is another commonly used method. Many large and medium-sized computers, as well as some more recent minicomputers and microcomputers, use cache memory.

The capacity of the cache is generally only a few percent of that of main memory, but its access speed can match the central processing unit. According to the principle of program locality, the units adjacent to a main memory unit that is currently being used are very likely to be used soon. Therefore, when the CPU accesses a unit of main memory, the hardware automatically transfers the group of units containing it into the cache, and the main memory unit the CPU accesses next is very likely to be in the group that was just transferred. The CPU can then access the cache directly. If, over the whole course of processing, most of the CPU's main memory accesses can be replaced by cache accesses, the processing speed of the computer system improves significantly.

Read hit rate

When the CPU finds the data it needs in the cache, this is called a hit. When the cache does not contain the data the CPU needs (a miss), the CPU must access main memory. In theory, in a CPU with a two-level cache, the hit rate for reading the L1 cache is 80%. That is, the useful data the CPU finds in the L1 cache accounts for 80% of the total, and the remaining 20% is read from the L2 cache. Because the data to be used cannot be predicted exactly, the hit rate for reading L2 is also about 80% (useful data read from L2 accounts for 16% of the total). The remaining data has to be fetched from memory, but this is already a fairly small percentage. Some high-end CPUs also have an L3 cache, which is designed to serve data that misses in the L2 cache. In a CPU with an L3 cache, only about 5% of the data needs to be fetched from memory, which further improves CPU efficiency.
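The two-level figures quoted above follow from simple multiplication, as the short calculation below shows. The variable names are illustrative; the 80% rates are the theoretical values from the text, not measurements.

```python
l1_hit = 0.80   # fraction of all reads served by L1
l2_hit = 0.80   # fraction of L1 misses served by L2

from_l1 = l1_hit
from_l2 = (1 - l1_hit) * l2_hit            # 20% of reads reach L2, 80% of those hit
from_memory = (1 - l1_hit) * (1 - l2_hit)  # what misses both levels

print(f"L1 {from_l1:.0%}, L2 {from_l2:.0%}, memory {from_memory:.0%}")
# L1 80%, L2 16%, memory 4%
```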

To ensure a high hit rate for CPU accesses, the content of the cache should be replaced according to some algorithm. A commonly used one is the least recently used (LRU) algorithm, which evicts the lines that have gone longest without being accessed. It requires a counter for each line. The LRU algorithm clears the counter of the line that is hit and adds 1 to the counters of the other lines. When replacement is needed, the line with the largest counter value is evicted. This is an efficient algorithm: because counters are cleared only on a hit, data that is no longer needed after a burst of frequent accesses ages out of the cache, which improves cache utilization.
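The counter scheme described above can be sketched as follows. The class name is hypothetical, and two details are assumptions not stated in the text: empty lines are filled before anything is evicted, and a newly loaded line has its counter cleared in the same way as a hit line.

```python
class CounterLRU:
    def __init__(self, lines):
        self.tags = [None] * lines     # None marks an empty line
        self.counters = [0] * lines    # one counter per line

    def access(self, tag):
        hit = tag in self.tags
        if hit:
            line = self.tags.index(tag)
        elif None in self.tags:
            line = self.tags.index(None)                     # fill an empty line first
        else:
            line = self.counters.index(max(self.counters))   # evict the largest count
        if not hit:
            self.tags[line] = tag
        # Clear the counter of the accessed line and add 1 to all the others.
        self.counters = [0 if i == line else c + 1
                         for i, c in enumerate(self.counters)]
        return hit

cache = CounterLRU(lines=2)
results = [cache.access(t) for t in ["A", "B", "A", "C", "B"]]
print(results, cache.tags)  # [False, False, True, False, False] ['B', 'C']
```

When "C" arrives, line "B" has the larger counter because "A" was just hit, so "B" is the one evicted.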

The impact of the cache replacement algorithm on the hit rate. When a new main memory block needs to be transferred into the cache and the available space is already full, data in the cache must be replaced, which raises the question of the replacement strategy (algorithm). According to the principle of program locality, a running program tends to use the instructions and data it has used recently. This provides a theoretical basis for replacement strategies. The goal of a replacement algorithm is to give the cache the highest possible hit rate. The cache replacement algorithm is an important factor in the performance of a proxy cache system; a good one produces a higher hit rate. The commonly used algorithms are as follows:

(1) Random method (RAND method). The random replacement algorithm uses a random number generator to produce the number of the block to be replaced, and replaces that block. This algorithm is simple and easy to implement. However, it takes no account of the past, present or future use of cache blocks, does not use the "historical information" about how the memory has been used, and does not follow the principle of locality of memory access, so it cannot improve the cache hit rate, and its hit rate is low.

(2) First-in first-out method (FIFO method). The FIFO algorithm replaces the block that entered the cache first. It determines the order of eviction from the order in which blocks were transferred into the cache and selects the earliest one for replacement. It does not need to record the usage of each block, so it is relatively easy to implement and has low system overhead. Its disadvantage is that blocks that are needed frequently (such as those of a loop) are also replaced simply because they entered the cache earliest. The method does use the "historical information" of main memory, but the block that entered first is not necessarily the one that is used least: the earliest information may be used again in the future, or even frequently. FIFO therefore does not correctly reflect the principle of program locality, its hit rate is not high, and an anomalous phenomenon may occur.
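The anomaly mentioned for FIFO is presumably the effect usually called Belady's anomaly, in which adding capacity increases the number of misses. The sketch below reproduces it on a commonly cited reference string; the function name is made up for the example.

```python
from collections import deque

def fifo_misses(trace, capacity):
    """Count misses for FIFO replacement with the given number of blocks."""
    resident, misses = deque(), 0
    for block in trace:
        if block in resident:
            continue
        misses += 1
        if len(resident) == capacity:
            resident.popleft()     # evict the block that entered first
        resident.append(block)
    return misses

trace = [1, 2, 3, 4, 1, 2, 5, 1, 2, 3, 4, 5]
print(fifo_misses(trace, 3), fifo_misses(trace, 4))  # 9 10
```

With three blocks the trace causes 9 misses, but with four blocks it causes 10, even though the larger cache holds more data.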

(3) Least recently used (LRU) algorithm. This method replaces the least recently used block in the cache. It is better than the first-in first-out algorithm. However, the fact that a block has not been used recently does not guarantee that it will not be used in the future. The LRU method always selects the least recently used block for replacement, based on the usage of each block. Although this better reflects the principle of program locality, it requires recording the usage of every block in the cache at all times in order to determine which one is the least recently used. The LRU algorithm is relatively reasonable, but it is more complicated to implement and its system overhead is relatively large. It usually requires a hardware or software module called a counter for each block to record its use.