Content

Performance testing plays an important role in software quality assurance and covers a wide range of test activities. The China Software Evaluation Center groups performance testing into three aspects: testing the application's performance on the client, on the network and on the server. Under normal circumstances, an effective and sensible combination of the three makes it possible to analyze system performance comprehensively and to predict bottlenecks.

Client

The purpose of client-side application performance testing is to examine the performance of the client application, and the test is driven from the client. It mainly includes concurrency testing, fatigue testing, large-data-volume testing and speed testing, of which concurrency testing is the focus.

Concurrent performance testing is the key point

Concurrency testing is a process of load testing and stress testing. The load is increased gradually until the system reaches a bottleneck or an unacceptable performance point. The system's concurrent performance is then determined by analyzing transaction execution indicators together with resource monitoring indicators. Load testing determines how the system performs under various workloads. Its goal is to measure how the outputs of the system components change as the load gradually increases, such as throughput, response time, CPU load and memory usage, and thereby to determine the system's performance. Load testing analyzes the software application and its supporting infrastructure and simulates real-world usage to determine acceptable performance. Stress testing locates the bottleneck or unacceptable performance point of a system in order to obtain the maximum service level the system can provide.
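The step-load procedure described above can be sketched as a small Python helper: raise the load, watch the indicators, and stop at the first unacceptable point. This is a minimal sketch that assumes the load tool has already produced one mean response time per load step. The function name `find_saturation_point`, the 2-second limit and the sample figures are all hypothetical.

```python
from typing import Optional, Sequence, Tuple


def find_saturation_point(
    steps: Sequence[Tuple[int, float]],
    max_response_s: float,
) -> Optional[int]:
    """Return the first load level (virtual users) whose mean response time
    exceeds max_response_s, or None if every step is acceptable.

    `steps` holds (virtual_users, mean_response_seconds) pairs ordered by
    increasing load, as collected during a step-load ramp (hypothetical data).
    """
    for users, response_s in steps:
        if response_s > max_response_s:
            return users
    return None


# Hypothetical ramp: 50 more virtual users per step.
ramp = [(50, 0.8), (100, 0.9), (150, 1.4), (200, 2.1), (250, 4.7)]
print(find_saturation_point(ramp, max_response_s=2.0))  # 200
```

The helper returns the first failing step rather than the last passing one. This matches how the text defines stress testing: the result is the load at which performance becomes unacceptable.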

Concurrency testing serves three purposes:

1. Evaluate current performance. Representative and critical business operations are selected from the real business, and test cases built on them evaluate the system's current performance.
2. Predict future performance. When the application's functionality is extended, or a new application is about to be deployed, load testing helps determine whether the system can still handle the expected user load.
3. Find bottlenecks. Simulating hundreds of users and running the test repeatedly confirms the performance bottlenecks, so that the application can be optimized and tuned.

An enterprise may develop an application system with its own staff or commission a software company to build it. Especially when the system will later run in production, users often ask whether it can withstand a large number of concurrent users accessing it at the same time. This kind of question is most common in online transaction processing (OLTP) database applications, Web browsing and video-on-demand systems. Answering it requires scientific software testing methods and advanced testing tools.

Illustration: Telecom billing software

Around the 20th of each month is the peak period for paying local telephone bills, and thousands of payment outlets across the city are open at the same time. Payment generally takes two steps. First, the user's charges for the current month are looked up by phone number. Then the cash is collected and the account is marked as paid. For one user these are two simple steps, but when hundreds or thousands of terminals perform them at the same time, the situation is very different. Such a large number of simultaneous transactions severely tests the application itself, the operating system, the central database server, the middleware server and the network equipment. Decision makers cannot wait until a problem occurs to think about the system's capacity. They need to foresee how well the software handles concurrency, and that problem should be solved during the software testing stage.

Most companies need to support hundreds of users, a variety of application environments, and complex products assembled from components supplied by different vendors. Unpredictable user loads and increasingly complex applications make companies worry about poor delivery performance, slow user response and system failures, all of which result in lost revenue.

How can the real situation be simulated? One idea is to gather several computers and the same number of operators, have them work at the same time, and time the responses with a stopwatch. Such manual, workshop-style testing is impractical and cannot capture what is happening inside the program. Stress testing tools are needed instead.

The basic testing strategy is automated load testing. One or a few PCs simulate hundreds or thousands of virtual users executing business operations at the same time. Meanwhile the tool records transaction processing times, peak data from the middleware server, database state and so on. Repeatable, realistic tests can thoroughly measure the scalability and performance of the application, identify problems and optimize system performance. Knowing the system's capacity in advance gives end users a solid basis for planning the configuration of the entire operating environment.
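The virtual-user idea can be illustrated with plain Python threads. This is a minimal sketch and does not reflect how commercial load tools work internally. The names `run_virtual_users` and `fake_billing_transaction` are hypothetical, and the 10 ms sleep only stands in for the real "query the bill, then mark it paid" transaction. A barrier releases all virtual users at once so that their requests really overlap.

```python
import statistics
import threading
import time
from typing import Callable, List


def run_virtual_users(transaction: Callable[[], None],
                      users: int,
                      iterations: int) -> List[float]:
    """Run `transaction` from `users` concurrent threads, each repeating it
    `iterations` times, and return every measured duration in seconds."""
    durations: List[float] = []
    lock = threading.Lock()
    start_gate = threading.Barrier(users)  # release all virtual users together

    def virtual_user() -> None:
        start_gate.wait()
        for _ in range(iterations):
            t0 = time.perf_counter()
            transaction()
            elapsed = time.perf_counter() - t0
            with lock:  # the list is shared by all threads
                durations.append(elapsed)

    threads = [threading.Thread(target=virtual_user) for _ in range(users)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return durations


def fake_billing_transaction() -> None:
    # Hypothetical stand-in for querying the bill and marking it as paid.
    time.sleep(0.01)


samples = run_virtual_users(fake_billing_transaction, users=20, iterations=5)
print(len(samples), round(statistics.mean(samples), 3))
```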

Preparation work before concurrent performance test

Test environment: Configuring the test environment is an important stage of test implementation. How well the environment fits the test strongly affects the authenticity and correctness of the results. The test environment has a hardware part and a software part. The hardware environment consists of the servers, clients and network connection devices needed for the test, plus auxiliary hardware such as printers and scanners. The software environment consists of the operating system, database and other application software on which the software under test runs.

A well-prepared test environment has three advantages:

1. It is stable and repeatable, which helps ensure correct test results.
2. It ensures that the technical requirements for test execution are met.
3. It ensures that the test results are correct, repeatable and easy to understand.

Test tools: Concurrency testing is black-box testing performed from the client side. It is generally automated with tools rather than done manually. There are many mature concurrency testing tools, and the choice depends mainly on the test requirements and the price/performance ratio. Well-known tools include QALoad, LoadRunner, Benchmark Factory and Webstress. All of them are automated load testing tools. They run repeatable, realistic tests that thoroughly measure an application's scalability and performance. They execute test tasks automatically across multiple platforms throughout the development life cycle. They also simulate hundreds or thousands of users executing key business operations concurrently.

Test data: The initial test environment must be loaded with suitable test data. This establishes a known data state and lets testers verify the test cases. Debugging the test cases before formal testing starts minimizes errors at the beginning of the formal run. When testing reaches a key stage, it is essential to back up the data state. Preparing initial data means saving suitable data and restoring it when needed. The initial data provides a baseline against which test results are evaluated.
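Saving and restoring the initial data can be sketched with Python's built-in `sqlite3` module, whose `Connection.backup()` copies a whole database. This is only an illustration that assumes a small SQLite database. The `account` table and its rows are hypothetical, and a production database would normally be backed up with its own tools.

```python
import sqlite3


def make_baseline(db: sqlite3.Connection) -> sqlite3.Connection:
    """Copy the current contents of `db` into a separate in-memory snapshot."""
    snapshot = sqlite3.connect(":memory:")
    db.backup(snapshot)
    return snapshot


def restore_baseline(snapshot: sqlite3.Connection, db: sqlite3.Connection) -> None:
    """Overwrite `db` with the snapshot so the next run starts from the baseline."""
    snapshot.backup(db)


# Hypothetical initial data for the billing example.
db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE account (phone TEXT PRIMARY KEY, due REAL, paid INTEGER)")
db.executemany("INSERT INTO account VALUES (?, ?, 0)",
               [("555-0101", 42.5), ("555-0102", 18.0)])
db.commit()

baseline = make_baseline(db)

# A test run changes the data (every bill is marked as paid)...
db.execute("UPDATE account SET paid = 1")
db.commit()

# ...and restoring the baseline puts the initial state back.
restore_baseline(baseline, db)
print(db.execute("SELECT SUM(paid) FROM account").fetchone()[0])  # 0
```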

During formal test execution, business test data is also required. For example, to test a concurrent query operation, the corresponding database tables must hold a considerable amount of data, and the types of data should cover all business cases.

Tests should imitate the real environment. Some software, especially commercial software for the general public, must be evaluated under realistic conditions. For example, when testing the scanning speed of antivirus software, the mix of file types placed on the hard disk should match a real environment as closely as possible. Only then is the measured data meaningful in practice.

Types and indicators of concurrent performance testing

The types of concurrency testing depend on the objects monitored by the testing tool. Take the QALoad automated load testing tool as an example. It provides monitoring objects for various test targets, including DB2, DCOM, ODBC, ORACLE, NETLoad, Corba, QARun, SAP, SQLServer, Sybase, Telnet, TUXEDO, UNIFACE, WinSock, WWW and JavaScript. It supports both Windows and UNIX test environments.

What matters most is applying the monitoring objects flexibly during testing. For example, three-tier architectures are now widely used, so concurrency testing of middleware has become a pressing issue. Many systems use domestically produced middleware. For these, testers can select the JavaScript monitoring object and write scripts by hand to achieve the test objectives.

When an automated load testing tool is used for concurrency testing, the basic process is as follows:

1. Define the test requirements and test content.
2. Design the test cases.
3. Prepare the test environment.
4. Record, write and debug the test scripts.
5. Distribute the scripts.
6. Configure playback and the loading strategy.
7. Execute the test and track it.
8. Analyze the results and locate problems.
9. Write the test report and test evaluation.

Different monitored objects call for different test indicators. The main indicators are transaction processing performance indicators and UNIX resource monitoring indicators. Transaction processing performance indicators include:

*Transaction results;

*Transactions per minute;

*Transaction response time;

*Number of virtual concurrent users.

Transaction response time is reported as the following values:

*Min: minimum server response time;

*Mean: average server response time;

*Max: maximum server response time;

*StdDev: deviation of the server response time (the larger the value, the larger the deviation);

*Median: median response time;

*90%: the server response time within which 90% of transactions complete.
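The response-time indicators listed above can be computed from raw samples with the standard library. This sketch makes two assumptions: StdDev is the sample standard deviation, and the 90% value uses the nearest-rank percentile. Load testing tools may define both slightly differently. The function names are hypothetical.

```python
import statistics
from typing import Dict, Sequence


def response_time_summary(samples: Sequence[float]) -> Dict[str, float]:
    """Min / Mean / Max / StdDev / Median / 90% for a non-empty list of
    response times in seconds.

    The 90% value is the smallest sample such that at least 90% of all
    samples are less than or equal to it (nearest-rank method).
    """
    ordered = sorted(samples)
    n = len(ordered)
    rank_90 = (9 * n + 9) // 10  # ceil(0.9 * n) in integer arithmetic, 1-based
    return {
        "Min": ordered[0],
        "Mean": statistics.mean(ordered),
        "Max": ordered[-1],
        "StdDev": statistics.stdev(ordered) if n > 1 else 0.0,
        "Median": statistics.median(ordered),
        "90%": ordered[rank_90 - 1],
    }


def transactions_per_minute(completed: int, duration_s: float) -> float:
    """Completed transactions scaled to a one-minute rate."""
    return completed * 60.0 / duration_s


print(response_time_summary([1.2, 0.8, 1.0, 3.5, 0.9, 1.1, 1.0, 1.3, 0.7, 2.2]))
print(transactions_per_minute(150, duration_s=120.0))  # 75.0
```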

Application example: "Xinhua News Agency Multimedia Database V1.0" Performance Test

China Software Testing Center (CSTC) performance-tested the "Xinhua News Agency Multimedia Database V1.0" used by Xinhua News Agency with industry-standard load testing tools. The test followed the "Multimedia Database (Phase I) Performance Test Requirements" issued by the Xinhua News Agency Technology Bureau and the national standard GB/T 17544 "Software Package Quality Requirements and Testing".

The purpose of the performance test is to simulate multiple users concurrently accessing the Xinhua News Agency multimedia database, perform key retrieval services, and analyze system performance.

The performance test focused on concurrency testing and fatigue testing of the main retrieval operations, which carry a large concurrent load. The system uses the B/S (browser/server) model. Concurrency test cases were designed for the Chinese library, English library and image library over specific time periods. They covered single search terms, multiple search terms, variable search expressions and mixed search operations. The fatigue test case ran single-search-term searches against the Chinese library with 200 concurrent users for about 8 hours. During the concurrency and fatigue tests, the monitored indicators included transaction processing performance and UNIX (Linux), Oracle and Apache resources.

Test conclusion: The tests ran in the Xinhua News Agency computer room and intranet test environments with 100M bandwidth. For each specified concurrency test case, the system withstood a load of 200 concurrent users, with a maximum of 78.73 transactions per minute. Operation was basically stable, but system performance degraded as the load increased.

The system withstood the fatigue load of 200 concurrent users over a period of approximately 8 hours and operated basically stably.

Monitoring of the system's UNIX (Linux), Oracle and Apache resources showed two things. System resources meet the above concurrency and fatigue performance requirements, and the hardware still has considerable headroom.

When the number of concurrent users exceeded 200, HTTP 500, connection and timeout errors were observed, and the Web server reported an out-of-memory error. The system's performance should be improved further to support more concurrent users.

It is recommended to further optimize the software system, make full use of hardware resources, and shorten the transaction response time.

Fatigue strength and large data volume test

Fatigue testing runs business operations continuously for a period of time at the maximum number of concurrent users the system supports while still operating stably. Transaction execution indicators and resource monitoring indicators are then analyzed together to determine how the system performs under its maximum workload.

Fatigue testing can be automated with tools or carried out with hand-written test programs, and the latter is more common.

Normally, the fatigue test runs for a set period at the maximum number of concurrent users to which the server responds normally and stably. It collects transaction execution data and system resource monitoring data. If errors occur and the test cannot complete, the test parameters should be adjusted promptly, for example by reducing the number of users or shortening the test period. A fatigue test can also be used to evaluate the current system's performance. In that case it runs for a set period with the number of concurrent users seen under normal business conditions.
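The adjustment rule in the previous paragraph can be written as a small retry loop: if errors occur, reduce the number of users and shorten the test period. In this sketch, `run` is a hypothetical callback that starts a fatigue run and returns the number of errors. The step of 20 users and the halving of the period are assumptions, not rules from the source.

```python
from typing import Callable, Optional, Tuple

# run(users, hours) -> number of errors seen; a hypothetical hook into the load tool.
RunFn = Callable[[int, float], int]


def plan_fatigue_run(run: RunFn, users: int, hours: float,
                     user_step: int = 20, min_users: int = 1,
                     min_hours: float = 1.0) -> Optional[Tuple[int, float]]:
    """Retry a fatigue run, lowering the load until it completes without errors.

    Each failed attempt reduces the user count by `user_step` and halves the
    test period (never below `min_hours`). Returns the (users, hours) that ran
    cleanly, or None if even the smallest load failed.
    """
    while users >= min_users:
        if run(users, hours) == 0:
            return users, hours
        users -= user_step
        hours = max(min_hours, hours / 2)
    return None


def fake_run(users: int, hours: float) -> int:
    # Pretend the server starts producing errors above 180 concurrent users.
    return 0 if users <= 180 else 3


print(plan_fatigue_run(fake_run, users=220, hours=8.0))  # (180, 2.0)
```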

Large-data-volume tests fall into two types. The first is a standalone large-data-volume test of specific storage, transmission, statistics and query operations. The second is a comprehensive data-volume test program that combines stress, load and fatigue testing. The key to large-data-volume testing is preparing the test data, and tools can help with that.
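Tool-assisted data preparation can be as simple as a script that generates rows reproducibly and bulk-loads them. The sketch assumes an SQLite table named `doc` with hypothetical columns that echo the Chinese, English and image libraries of the earlier example. A fixed random seed makes every test run start from the same data.

```python
import random
import sqlite3
from typing import Iterator, Tuple


def fake_documents(count: int, seed: int = 42) -> Iterator[Tuple[int, str, str]]:
    """Yield `count` synthetic (id, library, title) rows, reproducibly."""
    rng = random.Random(seed)
    libraries = ["chinese", "english", "image"]
    for i in range(count):
        yield i, rng.choice(libraries), f"document-{i:07d}"


db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE doc (id INTEGER PRIMARY KEY, library TEXT, title TEXT)")
with db:  # one transaction for the whole bulk load; commits on success
    db.executemany("INSERT INTO doc VALUES (?, ?, ?)", fake_documents(100_000))
print(db.execute("SELECT COUNT(*) FROM doc").fetchone()[0])  # 100000
```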

Speed testing mainly means manually timing key business operations that have speed requirements. The measurements are repeated and averaged, and the average can be compared with the response times measured by the tools.
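Comparing the manual timings with the tool's figure is simple arithmetic. Here is a minimal sketch with a hypothetical `compare_speed` helper and made-up timings.

```python
import statistics
from typing import Sequence


def compare_speed(manual_s: Sequence[float], tool_s: float) -> float:
    """Relative difference (%) between the average of several manual
    stopwatch timings and the response time reported by a tool."""
    manual_avg = statistics.mean(manual_s)
    return (manual_avg - tool_s) / tool_s * 100.0


# Three hypothetical stopwatch readings versus a tool-reported 1.6 s.
print(round(compare_speed([2.1, 1.9, 2.0], tool_s=1.6), 1))  # 25.0
```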

Network side

Testing the application's performance on the network focuses on using mature, advanced automation for three tasks: network application performance monitoring, network application performance analysis and network prediction.

Network application performance analysis

Network application performance analysis aims to show accurately how changes in network bandwidth, latency, load and TCP ports affect user response time. Analysis tools such as ApplicationExpert can find application bottlenecks. They show what the application does at each stage while it runs on the network and analyze application problems at the thread level. They help answer questions such as these. Does the client send unnecessary requests to the database server? When the application server receives a query from the client, does it spend an unacceptable amount of time contacting the database server? Such tools can also predict the application's response time before it goes into production. ApplicationExpert can also be used to tune the application's performance over a WAN. Because ApplicationExpert simulates application performance quickly and easily, users can compare end-user response times under different network configurations. They can then choose the network environment in which the application will go into production.
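A common first-order way to reason about how bandwidth and latency affect response time adds three terms: the server time, the number of application round trips multiplied by the round-trip time, and the payload size divided by the bandwidth. This simplified model is an assumption for illustration, not ApplicationExpert's algorithm, and it ignores TCP windowing and congestion. All figures below are hypothetical.

```python
def estimate_response_time(app_turns: int, payload_bytes: int,
                           rtt_s: float, bandwidth_bps: float,
                           server_time_s: float = 0.0) -> float:
    """First-order estimate: server time + round-trip latency + transfer time."""
    latency_s = app_turns * rtt_s                   # each application turn costs one RTT
    transfer_s = payload_bytes * 8 / bandwidth_bps  # time to push the payload bits
    return server_time_s + latency_s + transfer_s


# Hypothetical transaction: 40 application turns, 500 KB transferred.
lan = estimate_response_time(40, 500_000, rtt_s=0.001, bandwidth_bps=100e6)
wan = estimate_response_time(40, 500_000, rtt_s=0.08, bandwidth_bps=2e6)
print(f"LAN: {lan:.2f} s, WAN: {wan:.2f} s")  # LAN: 0.08 s, WAN: 5.20 s
```

With these numbers the same transaction takes about 0.08 s on the LAN and about 5.2 s on the WAN. Most of the WAN time comes from round trips, so unnecessary client requests to the database server cost far more over a WAN.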

Network application performance monitoring

After the system goes into operation, it is necessary to know promptly and accurately what is happening on the network. Which applications are running, and how? How many PCs are accessing the LAN or WAN? Which applications cause system bottlenecks or resource contention? At this point, network application performance monitoring and network resource management are critical to normal, stable operation. Network application performance monitoring tools make the job far more efficient, and the tool we can offer here is NetworkVantage. Put simply, it analyzes the performance of key applications and locates the source of a problem in the client, the server, the application or the network. In most cases, users most want to know which applications consume the most bandwidth and which users generate the most network traffic. The tool meets those needs as well.
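Finding which applications use the most bandwidth and which users generate the most traffic comes down to aggregating flow records. The sketch assumes flow records have already been exported as (user, application, bytes) tuples. The record format and sample data are hypothetical and do not reflect NetworkVantage's own data model.

```python
from collections import Counter
from typing import Iterable, List, Tuple

# Hypothetical flow record: (user, application, bytes transferred).
Flow = Tuple[str, str, int]
Ranking = List[Tuple[str, int]]


def top_talkers(flows: Iterable[Flow], n: int = 3) -> Tuple[Ranking, Ranking]:
    """Rank applications and users by total bytes, largest first."""
    by_app: Counter = Counter()
    by_user: Counter = Counter()
    for user, app, nbytes in flows:
        by_app[app] += nbytes
        by_user[user] += nbytes
    return by_app.most_common(n), by_user.most_common(n)


flows = [
    ("alice", "http", 5_000),
    ("bob", "ftp", 80_000),
    ("alice", "ftp", 20_000),
    ("carol", "http", 7_000),
]
print(top_talkers(flows, n=1))  # ([('ftp', 100000)], [('bob', 80000)])
```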

Network prediction

With future expansion in mind, it is very important to predict how changes in network traffic and network structure will affect user systems. Predictions are made from planning data, and network performance forecasts are delivered in good time. We use PREDICTOR, a network predictive analysis and capacity planning tool, for the following tasks:

*Set service levels;

*Perform routine network capacity planning;

*Test the network offline;

*Analyze network failures and capacity limits;

*Carry out routine fault diagnosis;

*Predict how network equipment migrations and upgrades will affect the whole network.

The network topology comes from the network management software, and traffic information comes from existing traffic monitoring software. If no such software exists, traffic data can be generated manually. Together these give the basic structure of the existing network. From this baseline, reports and graphs show how changes in network structure and network traffic affect network performance. PREDICTOR provides the following information:

*When to upgrade: based on the predicted results, it helps users upgrade the network in time, before key equipment exceeds its utilization threshold and system performance degrades.

*What to upgrade: it shows which network equipment needs upgrading to reduce network latency and avoid bottlenecks.

*Whether to upgrade: it indicates which network upgrades are actually necessary.
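The utilization-threshold check can be sketched as follows. This assumes traffic grows by a single uniform factor and uses a hypothetical 70% threshold. PREDICTOR's real models are more detailed, and the link names and figures are made up.

```python
from typing import Dict, List


def links_over_threshold(current_bps: Dict[str, float],
                         capacity_bps: Dict[str, float],
                         growth: float,
                         threshold: float = 0.7) -> List[str]:
    """Return the links whose projected utilization exceeds `threshold`
    after current traffic grows by the factor `growth`."""
    flagged = []
    for link, load in current_bps.items():
        projected_utilization = load * growth / capacity_bps[link]
        if projected_utilization > threshold:
            flagged.append(link)
    return sorted(flagged)


# Hypothetical baseline traffic and link capacities (bits per second).
current = {"core-uplink": 60e6, "branch-wan": 1.2e6, "dmz": 10e6}
capacity = {"core-uplink": 100e6, "branch-wan": 2e6, "dmz": 100e6}
print(links_over_threshold(current, capacity, growth=1.3))  # ['branch-wan', 'core-uplink']
```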

Server

The application's performance on the server can be tested with monitoring tools or with the system's own monitoring commands. For example, in a Tuxedo environment the UNIX top command can be used to monitor resource usage. The aim is to monitor comprehensively the server hardware, the server operating system, the database system and the application's performance on the server. The test principle is shown in the figure below.

UNIX resource monitoring indicators and descriptions

| Monitoring indicator | Description |
|---|---|
| Load average | Average number of runnable processes over the last 60 seconds under normal system conditions |
| Collision rate | Number of collisions per second detected on the Ethernet |
| Process/thread switch rate | Number of process and thread switches per second |
| CPU utilization | CPU usage (%) |
| Disk swap rate | Disk swap rate |
| Receive packet error rate | Number of errors per second when receiving Ethernet packets |
| Packet input rate | Number of Ethernet packets received per second |
| Interrupt rate | Number of interrupts handled by the CPU per second |
| Output packet error rate | Number of errors per second when sending Ethernet packets |
| Packet output rate | Number of Ethernet packets sent per second |
| Page-in rate | Number of memory pages read into physical memory per second |
| Page-out rate | Number of memory pages written per second from physical memory to the page file, or removed from physical memory |
| Page swap rate | Number of pages written to and read from physical memory per second |
| Process swap-in rate | Number of processes entering the swap area |
| Process swap-out rate | Number of processes leaving the swap area |
| System CPU utilization | CPU usage in system mode (%) |
| User CPU utilization | CPU usage in user mode (%) |
| Disk blocking | Number of bytes blocked by the disk per second |
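Several of these indicators are usually read with the system's own commands, for example `vmstat` or `sar` on Linux. As an illustration, CPU utilization, user CPU utilization and system CPU utilization can be derived from two samples of the `cpu` line in Linux `/proc/stat`. This sketch is Linux-specific. Two choices in it are this example's own: `iowait` counts as idle time, and `irq` and `softirq` count as system time. The helper names are hypothetical.

```python
from typing import Dict, List, Sequence


def read_proc_stat_cpu(path: str = "/proc/stat") -> List[int]:
    """Return the counters from the aggregate 'cpu' line (Linux only)."""
    with open(path) as f:
        fields = f.readline().split()  # first line: "cpu  user nice system idle ..."
    return [int(x) for x in fields[1:]]


def cpu_utilization(before: Sequence[int], after: Sequence[int]) -> Dict[str, float]:
    """CPU usage (%) between two samples whose first seven fields are, in
    /proc/stat order: user, nice, system, idle, iowait, irq, softirq."""
    delta = [b - a for a, b in zip(before[:7], after[:7])]
    user, nice, system, idle, iowait, irq, softirq = delta
    total = sum(delta)
    if total == 0:  # no time elapsed between the samples
        return {"user": 0.0, "system": 0.0, "total": 0.0}
    busy = total - idle - iowait
    return {
        "user": (user + nice) * 100.0 / total,              # user-mode CPU
        "system": (system + irq + softirq) * 100.0 / total,  # system-mode CPU
        "total": busy * 100.0 / total,                       # overall CPU
    }


# On Linux: before = read_proc_stat_cpu(); time.sleep(1); after = read_proc_stat_cpu()
# Synthetic samples are used here so the example runs anywhere.
print(cpu_utilization([100, 0, 50, 850, 0, 0, 0], [160, 0, 80, 1010, 0, 0, 0]))
# {'user': 24.0, 'system': 12.0, 'total': 36.0}
```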

Purpose

The purpose is to verify whether the software system meets the performance targets set by the user and to find its performance bottlenecks. The software is then optimized, which ultimately optimizes the system as a whole.

This includes the following aspects:

1. Evaluate system capability: the load and response-time data obtained in testing can be used to validate the capacity planning model and support decision-making.

2. Identify weaknesses in the system: a controlled load can be raised to an extreme level and beyond, exposing bottlenecks or weak points so they can be fixed.

3. Tune the system: run the test repeatedly to verify that tuning activities produce the expected results, thereby improving performance.

4. Detect problems in the software: long-running test execution can make the program fail because of memory leaks, revealing hidden problems or conflicts in the program.

5. Verify stability (resilience) and reliability: running the test for a certain period under production load is the only way to assess whether the system's stability and reliability meet the requirements.

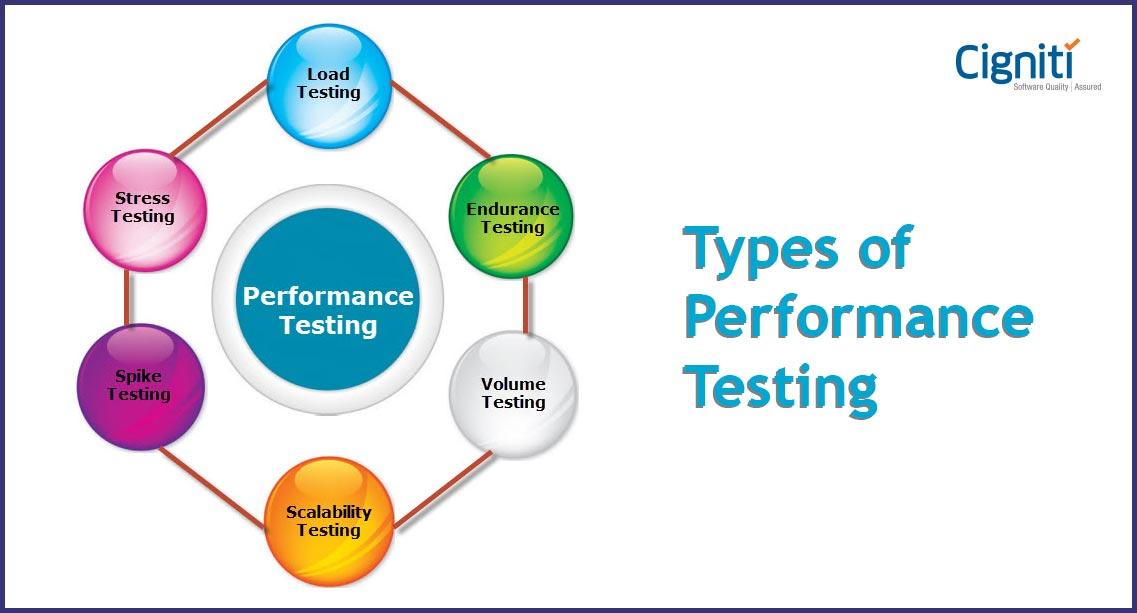

Type

Performance test types include load testing, stress testing, volume testing and others.

Load testing: load testing mainly checks whether the software system meets the goals set in the requirements document. Examples are the maximum number of concurrent users the software supports over a given period and the error rate of software requests. It mainly tests the performance of the software system.

Stress testing (also called strength testing): stress testing mainly checks whether the hardware system meets the performance goals set in the requirements document. Examples are CPU utilization, memory utilization, disk I/O throughput and network throughput over a given period. The biggest difference between stress testing and load testing is the purpose of the test.

Volume testing: determines the maximum capacity of the system, such as the maximum number of users, the maximum storage capacity and the maximum data throughput it can process.

The performance test includes the following test types:

Benchmark testing: compares the performance of a new or unknown test object with a known reference standard, such as existing software or evaluation criteria.

Contention testing: verifies whether the test object can handle multiple actors requesting the same resources (data records, memory, etc.).

Performance configuration: verifies that the test object's performance behavior is acceptable under different configurations while the operating conditions stay the same.

Load testing: verifies that the test object's performance behavior is acceptable under different operating conditions (such as different numbers of users or transactions) while the configuration stays the same.

Stress testing: verifies that the test object's performance behavior is acceptable under abnormal or extreme conditions, such as reduced resources or an excessive number of users.

Capacity testing: verifies the maximum number of test users that can use the software at the same time.

Performance evaluation is usually carried out together with user representatives, using a multi-level approach.

The first level of performance analysis evaluates the results of a single actor/use-case instance and compares them across multiple test executions. For example, the test object runs with no other activity while a single actor executes a single use case. The recorded behavior is then compared with several other executions of the same actor/use case. This level helps identify trends that may indicate contention for system resources. Such contention would undermine the conclusions drawn from other performance test results.

The second level of analysis examines the summary statistics and actual data values for a specific actor/use-case execution, together with the test object's performance behavior. Summary statistics include the standard deviation and the percentile distribution of response times. This information shows how the system's response varies, as each actor experiences it.

The third level of analysis helps explain the causes and significance of performance problems. This detailed analysis applies statistical methods to low-level data to help testers draw correct conclusions. It provides objective, quantitative criteria for decision-making, but it is time-consuming and requires a basic understanding of statistics.

Detailed analysis uses the concept of statistical significance to decide whether differences in performance behavior are real or come from random events in collecting the test data. At a fundamental level, every event involves some randomness. Statistical testing determines whether there is a systematic difference that random events cannot explain.
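A permutation test is one simple way to ask whether two sets of response times differ systematically or only by chance. The sketch below is one possible technique, not the method the source prescribes. The function name, the 2,000 resamples and the sample data are assumptions.

```python
import random
import statistics
from typing import Sequence


def permutation_test(a: Sequence[float], b: Sequence[float],
                     n_resamples: int = 2000, seed: int = 0) -> float:
    """Two-sided permutation test on the difference of mean response times.

    Returns an estimated p-value: the share of random relabelings of the
    pooled samples whose absolute mean difference is at least the observed
    one, with the usual +1 correction so the estimate is never exactly 0.
    """
    rng = random.Random(seed)
    observed = abs(statistics.mean(a) - statistics.mean(b))
    pooled = list(a) + list(b)
    k = len(a)
    hits = 0
    for _ in range(n_resamples):
        rng.shuffle(pooled)  # randomly reassign samples to the two groups
        diff = abs(statistics.mean(pooled[:k]) - statistics.mean(pooled[k:]))
        if diff >= observed:
            hits += 1
    return (hits + 1) / (n_resamples + 1)


# Hypothetical response times (seconds) for the same use case in two test runs.
before = [1.00, 1.02, 0.98, 1.01, 0.99, 1.00, 1.03, 0.97, 1.00, 1.01]
after = [1.50, 1.52, 1.48, 1.51, 1.49, 1.50, 1.53, 1.47, 1.50, 1.51]
print(permutation_test(before, after))
```

A small p-value, say below 0.05, suggests a systematic difference between the two runs. A large one means the difference could plausibly be random.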

Indicators

Performance testing mainly uses automated testing tools to simulate normal, peak and abnormal load conditions and measure the system's performance indicators. Load testing and stress testing are both forms of performance testing, and the two can be combined. Load testing determines how the system performs under various workloads. Its goal is to measure how the system's performance indicators change as the load gradually increases. Stress testing locates the bottleneck or unacceptable performance point of a system in order to obtain the maximum service level the system can provide.

In practice we often test two types of software: B/S (browser/server) and C/S (client/server). What performance indicators apply to each?

B/S programs usually focus on the following general indicators (partial list):

Web server indicators:

*Avg Rps: average number of responses per second = total number of responses / number of seconds;

*Avg time to last byte per iteration (msecs): the average time, in milliseconds, for one iteration of the business script to receive its last byte. Some people confuse it with Avg Rps above;

*Successful Rounds: successful requests;

*Failed Rounds: failed requests;

*Successful Hits: successful hits;

*Failed Hits: failed hits;

*Hits Per Second: number of hits per second;

*Successful Hits Per Second: number of successful hits per second;

*Failed Hits Per Second: number of failed hits per second;

*Attempted Connections: number of attempted connections;
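The hit-based indicators can be derived from a list of HTTP status codes and the test duration. The sketch assumes a hit is successful when its status code is below 400. That success rule and the helper name are hypothetical.

```python
from typing import Dict, Iterable


def hit_rates(statuses: Iterable[int], duration_s: float) -> Dict[str, float]:
    """Hit counts and per-second rates from the status codes of all requests
    made during a test lasting `duration_s` seconds."""
    ok = failed = 0
    for status in statuses:
        if status < 400:  # assumed success rule: 1xx-3xx count as successful
            ok += 1
        else:
            failed += 1
    return {
        "Successful Hits": ok,
        "Failed Hits": failed,
        "Hits Per Second": (ok + failed) / duration_s,
        "Successful Hits Per Second": ok / duration_s,
        "Failed Hits Per Second": failed / duration_s,
    }


print(hit_rates([200, 200, 500, 304], duration_s=2.0))
```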

In C/S programs the back end is usually a database, so we pay more attention to database indicators:

*User Connections: the number of user connections, that is, the number of database connections;

*Number of Deadlocks: the number of database deadlocks;

*Buffer Cache Hit: database buffer cache hits

In practice we also observe memory, CPU and other system resource usage during multi-user testing. These indicators actually belong to an extension of performance testing called contention testing. Contention testing checks how well the software competes with other related systems for shared resources such as data records and memory.

Software architecture constrains the choice of testing strategies and tools, so in practice we need to know how to choose a performance testing strategy. By system architecture, general software can be divided into the following types:

C/S

*Client/server architecture

*Three-tier client/server architecture

*Distributed client/server architecture

B/S

*Three-tier browser/Web server architecture

*Three-tier architecture based on a middleware application server

*Multi-tier architecture based on a Web server and middleware

Steps

Developers may implement a system architecture in many different ways, so real situations are varied and complicated, and every technology cannot be explained in detail here. Instead, we introduce a method for choosing a test strategy. It helps analyze the performance indicators of each part of the software and, from them, the indicators and bottlenecks of the overall architecture.

Because projects differ, the chosen metrics and evaluation methods also differ. Still, some general steps help complete a performance testing project:

1. Set goals and analyze the system

2. Choose the method of test measurement

3. Learn the related technologies and tools

4. Develop evaluation criteria

5. Design test cases

6. Run test cases

7. Analyze test results

Set goals and analyze the system

The first step in every performance test plan is to set goals and analyze how the system is composed. Only with clear goals and an understanding of the system's structure can the scope of the test be defined and the required technologies identified.

Goal:

1. Determine customer needs and expectations

2. Actual business requirements

3. System requirements

System composition

System composition has several aspects: the system category, the system structure and the system functions. Understanding them helps clarify the scope of the test and choose suitable test methods.

System category: identifying the system category tells us which technologies we need to master, and performance testing succeeds only if we master them. For example, a B/S system requires knowledge of the HTTP protocol, Java, HTML and related technologies. A C/S system may require an understanding of the operating system, Winsock, COM and so on. Classifying the system is therefore very important.

System structure: hardware settings and operating system settings constrain performance testing. Performance testing generally uses testing tools to imitate a large number of real user operations, so the system runs under overload conditions. Different system structures will therefore produce different results.

System functions: the different subsystems the system provides, such as the document subsystem and the conference subsystem of an office management system. The performance test simulates these functions, so they must be understood.

Choose the method of test measurement

After the first step, you will have a clear understanding of the system. Next, we will focus on software metrics and collect system-related data.

Related aspects of measurement:

*Develop specifications

*Define the relevant processes, roles and responsibilities

*Develop improvement strategies

*Develop standards for comparing results

Learn related technologies and tools

Performance testing uses tools to simulate a large number of user operations and increase the load on the system, so testers need a working knowledge of those tools. Performance testing tools generally record user operations at the protocol level, for example through Winsock or HTTP. The protocol is chosen according to the software's architecture (HTTP is usually chosen for web systems and Winsock for C/S systems), and different tools use different scripting languages; for example, VU scripts in Rational Robot are written in a C-like language.

Because each performance testing tool has its own characteristics, candidate tools need to be evaluated before testing starts; only then can a tool that fits the existing software architecture be selected. Once the tool has been chosen, testers should be organized to learn it and trained in the related technologies.

Develop evaluation criteria

The purpose of any test is to ensure that the software meets the pre-specified goals and requirements. Performance testing is no exception. Therefore, a set of standards must be developed.

Performance testing generally uses one of four modeling techniques:

*Linear projection: plot a large amount of past, extrapolated or projected data as a scatter diagram, and continually compare the chart with the current state of the system.

*Analysis model: use queuing theory formulas and algorithms to predict response times, relating data that describes the workload to the characteristics of the system.

*Imitation: test your system by imitating the way real users use it.

*Benchmark: define a test, treat your initial run of it as the standard, and compare all subsequent test results against it.
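The analysis model can be illustrated with the simplest queueing formula. The sketch below assumes an M/M/1 queue (Poisson arrivals, exponentially distributed service times, a single server), for which the mean response time is R = S / (1 - U) with utilization U = λS. Real systems rarely satisfy these assumptions exactly, so treat the output as a rough prediction; the function name and the 50 ms service time are hypothetical.

```python
def mm1_response_time(arrival_rate: float, service_time: float) -> float:
    """Predict mean response time R = S / (1 - U) for an M/M/1 queue.

    arrival_rate: requests per second (lambda)
    service_time: mean seconds of service per request (S)
    """
    utilization = arrival_rate * service_time  # U = lambda * S
    if utilization >= 1.0:
        # Arrivals outpace service; the queue grows without bound.
        raise ValueError("queue is unstable: utilization must be below 1")
    return service_time / (1.0 - utilization)


if __name__ == "__main__":
    # Hypothetical workload: 50 ms of service per request.
    for rate in (5, 10, 15, 18):
        r = mm1_response_time(rate, 0.05)
        print(f"{rate:>3} req/s -> {r * 1000:.0f} ms")
```

With a 50 ms service time, raising the arrival rate from 10 to 15 requests per second doubles the predicted response time (100 ms to 200 ms), which shows why response time climbs steeply as a resource nears saturation.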

Design test cases

Test case design is based on an understanding of the software's business processes. The guiding principle is to obtain as much test information as possible with the least impact, and the goal is to cover as many test elements as possible in each run. The test cases must be achievable with the chosen test tool, and different test scenarios exercise different functions. Unlike ordinary functional test cases, performance test cases should be designed to be as complex as possible, because complex cases are more likely to expose the software's performance bottlenecks.

Run test cases

Run the test cases with the performance testing tool. Results obtained in a single environment are not accurate enough, so the test cases should be run in different test environments and on different machine configurations.

Analyze the test results

After running the test cases, collect the relevant information, analyze the data statistically, and locate the performance bottlenecks. Eliminating errors and other distorting factors brings the results closer to the real situation. Different architectures call for different analysis methods: for a B/S system we analyze network bandwidth and how traffic affects the response time of user operations, while for a C/S system we may care more about how the overall system configuration affects user operations.
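The statistical analysis step usually starts by reducing raw response times to a few summary figures. The sketch below is a minimal, tool-independent example; the choice of nearest-rank percentiles, the 90th percentile and the sample data are assumptions for illustration.

```python
import math
import statistics


def percentile(samples, pct):
    """Nearest-rank percentile (pct in (0, 100]) of a list of samples."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based nearest rank
    return ordered[max(rank, 1) - 1]


def summarize(response_times_ms, duration_s):
    """Summarize one run: sample count, mean, 90th percentile, max and throughput."""
    return {
        "count": len(response_times_ms),
        "mean_ms": statistics.mean(response_times_ms),
        "p90_ms": percentile(response_times_ms, 90),
        "max_ms": max(response_times_ms),
        "throughput_per_s": len(response_times_ms) / duration_s,
    }


if __name__ == "__main__":
    # Hypothetical samples from a 5-second run.
    print(summarize([120, 80, 100, 95, 110, 300, 105, 90, 85, 115], 5))
```

In this sample a single slow request (300 ms) lifts the mean to 120 ms although most requests finish near 100 ms, which is why percentiles are usually reported alongside averages.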

Methods

For enterprise applications there are many ways to run performance tests, and some are harder to implement than others. The type of performance test to run depends on the desired result. For example, benchmarking is the best method when reproducibility is required, while capacity planning tests should be used to find the system's upper limit in terms of concurrent user load. This article introduces several methods for setting up and running performance tests and discusses how they differ.

Without reasonable planning, performance testing a J2EE application is a daunting and somewhat confusing task. As with any software development process, requirements must be collected, business needs understood, and a formal schedule designed before actual testing begins. The requirements for performance testing are driven by business needs and clarified by a set of use cases. These use cases can be based on historical data (for example, the server's load pattern over a week) or on predicted approximations. Once you know what needs to be tested, you need to know how to test it.

In the early stages of development, benchmark tests should be used to determine whether the application has performance regressions. Benchmarking collects repeatable results in a relatively short time. The best way to benchmark is to change one and only one parameter per test. For example, to find out whether increasing JVM memory affects application performance, increase it step by step (for example, from 1024MB to 1224MB, then 1524MB, and finally 2024MB), collecting the results and environment data and recording the information at each stage before moving on to the next. This leaves a clear trail to follow when analyzing the results. The next section explains what a benchmark is and the best parameters for running one.
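The one-parameter-per-test rule can be enforced mechanically by deriving every run from a fixed baseline. The sketch below is a hypothetical planning helper: the `RunConfig` fields are assumptions, not settings of any particular tool, and the heap sizes are those from the example above.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class RunConfig:
    """Hypothetical settings for one benchmark run."""
    heap_mb: int
    virtual_users: int
    think_time_s: float


def one_factor_plan(baseline, field, values):
    """Return one config per value, changing only `field` from the baseline."""
    return [replace(baseline, **{field: v}) for v in values]


baseline = RunConfig(heap_mb=1024, virtual_users=200, think_time_s=0.0)

if __name__ == "__main__":
    for cfg in one_factor_plan(baseline, "heap_mb", [1024, 1224, 1524, 2024]):
        print(cfg)  # every run differs from the baseline in heap size only
```

Because the dataclass is frozen and each run is built with `dataclasses.replace`, no other setting can drift between runs, so any change in the results can be attributed to the one parameter that changed.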

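In the late stages of development, once the application's bugs have been fixed and it has reached a stable state, more complex tests can be run to determine how the system performs under different load patterns. These tests are called capacity planning tests, soak tests and peak-rest tests, and they aim to exercise scenarios close to the real world by testing the application's reliability, robustness and scalability. The descriptions below should be understood in an abstract sense, because every application's usage pattern is different. For example, capacity planning tests usually use a fairly slow ramp-up (defined below), but if the application receives rapid bursts of traffic at some point in the day, the test should naturally be modified to reflect that. Keep in mind, however, that changing test parameters (such as the ramp-up period or the users' think time) will certainly change the test results. A good approach is to run a series of benchmarks to establish a known, controlled environment, and then compare changes against it.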

Benchmarking

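The key to benchmarking is obtaining consistent, reproducible results. Reproducible results have two benefits: they reduce the number of times tests must be rerun, and they give more confidence in the product being tested and in the numbers produced. The performance testing tool used can have a large effect on the results. Suppose the two metrics being tested are server response time and throughput; both are affected by the load on the server. That load depends on two factors: the number of connections (or virtual users) communicating with the server at the same time, and the length of the think time between each virtual user's requests. Clearly, the more users communicate with the server, the greater the load. Likewise, the shorter the think time between requests, the greater the load. Different combinations of these two factors produce different levels of server load. Keep in mind that as the load on the server increases, throughput keeps climbing until it reaches a certain point.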
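How users and think time combine into load can be made concrete with the asymptotic bounds of operational analysis, a modeling technique not described in the original text. With N users, think time Z, total service demand D per request and demand D_max at the busiest resource, throughput cannot exceed min(N / (D + Z), 1 / D_max). The demand values below are hypothetical.

```python
def throughput_upper_bound(users, think_time_s, total_demand_s, bottleneck_demand_s):
    """Upper bound on throughput (requests/s) for a closed system.

    users: concurrent virtual users (N)
    think_time_s: think time between one user's requests (Z)
    total_demand_s: service time one request needs across all resources (D)
    bottleneck_demand_s: service time at the busiest resource (D_max)
    """
    light_load = users / (total_demand_s + think_time_s)  # grows with N, shrinks with Z
    saturation = 1.0 / bottleneck_demand_s                 # flat ceiling set by the bottleneck
    return min(light_load, saturation)


if __name__ == "__main__":
    # Hypothetical demands: 100 ms in total, 20 ms at the bottleneck.
    for n in (1, 2, 5, 10, 20):
        print(n, throughput_upper_bound(n, 0.0, 0.1, 0.02))
```

With zero think time and these demands, throughput can rise by at most 10 requests/s per user until it reaches the 50 requests/s ceiling at five users; beyond that, extra users only lengthen the queue, matching the plateau described in the text.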

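Note that throughput grows at a steady rate and then levels off at a certain point.

At some point the execution queue starts to grow: all the threads on the server are in use, so incoming requests are no longer processed immediately but are queued and processed when a thread becomes free.

Note that the execution queue length is zero at first and then starts to grow at a steady rate. This is because the load on the system grows steadily; although the system initially has enough free threads to handle the added load, it eventually cannot keep up and must queue the requests.

When the system reaches its saturation point and server throughput holds steady, the system has reached its upper limit under the given conditions. However, as the server load continues to grow, response time keeps lengthening even though throughput stays flat.

Note that as the execution queue (Figure 2) begins to grow, response time also begins to grow at an increasing rate, because requests can no longer be processed promptly.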

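To obtain truly reproducible results, the system should be placed under the same high load every time. To do this, the virtual users communicating with the server should set the think time between requests to zero. The server is then overloaded immediately and starts building an execution queue. If the number of requests (virtual users) is held constant, the benchmark results should be very accurate and fully reproducible.

One question you may ask is: "How should the results be measured?" For a given test, take the average response time and throughput. The only way to obtain these values accurately is to load all the users at once and then keep them running for a predetermined period. This is called a "flat" test.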
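A flat test with zero think time can be sketched as a closed-loop load generator: every virtual user starts at once and issues its next request as soon as the previous one returns, for a fixed period. The sketch below is a minimal illustration using Python threads; `request_fn` is a hypothetical stand-in for one request to the system under test, and a real tool would also handle errors, warm-up and distributed load generation.

```python
import threading
import time


def run_flat_test(request_fn, users, duration_s, think_time_s=0.0):
    """Start all virtual users at once and run them for a fixed period.

    request_fn: hypothetical callable that performs one request.
    Returns (throughput in requests/s, mean response time in seconds).
    """
    timings = []
    lock = threading.Lock()
    stop_at = time.perf_counter() + duration_s

    def virtual_user():
        while time.perf_counter() < stop_at:
            start = time.perf_counter()
            request_fn()
            elapsed = time.perf_counter() - start
            with lock:
                timings.append(elapsed)
            if think_time_s:  # zero think time for benchmarks
                time.sleep(think_time_s)

    threads = [threading.Thread(target=virtual_user) for _ in range(users)]
    for t in threads:  # "flat": every user is started immediately
        t.start()
    for t in threads:
        t.join()
    if not timings:
        return 0.0, 0.0
    return len(timings) / duration_s, sum(timings) / len(timings)


if __name__ == "__main__":
    # Simulated request taking about 10 ms.
    print(run_flat_test(lambda: time.sleep(0.01), users=5, duration_s=1.0))
```

Because the number of users and the think time are fixed for the whole run, the averages it returns are directly comparable between runs, which is the property benchmarking depends on.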

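The counterpart to this is the "ramp-up" test.

In a ramp-up test, users are added in staggered steps (a few new users every few seconds). A ramp-up test cannot produce accurate, reproducible averages, because users are added a batch at a time and the load on the system keeps changing. A flat run is therefore the ideal mode for collecting benchmark data.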
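The difference between the two load shapes is easiest to see as a function of time. The sketch below is a hypothetical helper (its name and parameters are assumptions) that returns how many virtual users are active at time t for a flat test and for a stepped ramp-up.

```python
def active_users(t_s, total_users, step_users=0, step_interval_s=1.0):
    """Number of virtual users running at time t_s.

    step_users == 0 models a flat test (everyone starts at t = 0);
    otherwise step_users more users join every step_interval_s (ramp-up).
    """
    if step_users == 0:
        return total_users
    steps = int(t_s // step_interval_s) + 1  # the first batch starts at t = 0
    return min(total_users, steps * step_users)


if __name__ == "__main__":
    for t in (0, 12, 60):
        print(t, active_users(t, 100), active_users(t, 100, 10, 5.0))
```

During a ramp-up the load is different at almost every sampling instant, so an average taken over the whole run mixes many load levels; in a flat test the load is constant, so the average describes a single, reproducible condition.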

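This is not to belittle the ramp-up test. In fact, it is very useful for finding the range for the flat tests to run later. Its advantage is that it shows how the measurements change as the system load changes, so the range of later flat tests can be chosen accordingly.

The problem with flat tests is that the system can experience a "wave" effect.

Note the appearance of waves: throughput is no longer smooth.

This shows up throughout the system, including CPU usage.

Note that a wave appears at regular intervals. CPU usage is no longer smooth; it has spikes like those in the throughput graph.

The execution queue is also under an unstable load, so it grows and shrinks as the system load rises and falls.

Again, a wave appears at regular intervals. The execution queue curve is very similar to the CPU usage graph above.

Finally, transaction response times in the system follow the same wave pattern.

A wave again appears at regular intervals. Transaction response times resemble the graphs above, except that the effect gradually weakens over time.

This happens when all the users in a test perform nearly the same operations at the same time. It produces very unreliable and inaccurate results, so steps must be taken to prevent it. There are two ways to obtain accurate measurements from this type of result. If the test can run long enough (sometimes several hours, depending on how long the users' operations take), the random nature of events eventually "flattens" the server's throughput. Alternatively, take only the measurements between two calm points of the wave; the drawback is that the window for capturing data is very short.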

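Capacity planning testing

For capacity planning tests, the goal is to find out how far a given application's performance can go in a specific environment. Reproducibility matters less here than in benchmarking, because these tests usually include random factors, introduced to simulate a real-world application under real user load as closely as possible. Typically, the specific goal is to find the maximum number of concurrent users the system supports at a given server response time. For example, you might want to know how many servers are needed to support 8,000 concurrent users with a response time of 5 seconds or less. To answer this question, you need to know more about the system.

Several factors must be considered to determine system capacity. The total number of users of a server is usually very large (in the hundreds of thousands), but that number says little on its own. What you really need to know is how many of those users communicate with the server concurrently. Next, you need to know each user's "think time", that is, the time between requests. This matters because the shorter the think time, the fewer concurrent users the system can support. For example, if users' think time is 1 second, the system might support only a few hundred such concurrent users; if it is 30 seconds, the same hardware and application might support tens of thousands. In the real world it is usually hard to determine users' exact think time, and real users do not issue requests at precise intervals.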
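The effect of think time on capacity follows from Little's Law applied to a closed system, N = X × (R + Z): the number of concurrent users N a server can hold equals its throughput X times the response time R plus the think time Z. The sketch below applies it to the 8,000-user, 5-second question above. The 200 requests/s per-server throughput is a hypothetical figure that would in practice come from flat tests, and spreading load evenly with linear scaling across servers is an assumption.

```python
import math


def users_per_server(max_throughput_rps, response_time_s, think_time_s):
    """Little's Law for a closed system: N = X * (R + Z)."""
    return max_throughput_rps * (response_time_s + think_time_s)


def servers_needed(target_users, max_throughput_rps, response_time_s, think_time_s):
    """Servers required, assuming load spreads evenly and capacity scales linearly."""
    per_server = users_per_server(max_throughput_rps, response_time_s, think_time_s)
    return math.ceil(target_users / per_server)


if __name__ == "__main__":
    # Hypothetical 200 req/s per server; 8,000 users at <= 5 s response time.
    for think in (1, 30):
        print(think, servers_needed(8000, 200, 5, think))
```

Under these assumptions a 1-second think time needs seven servers, while a 30-second think time needs two. Because R is set to the maximum acceptable response time, the result is an optimistic lower bound on the server count.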

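This is where randomness comes in. If the average user's think time is known to be 5 seconds with a 20% error, the load test should be designed so that the time between requests is 5×(1±20%) seconds. More randomness can be added to the load scenario with the idea of "pacing": after a virtual user completes a full set of requests, it pauses for a set period, or for a small random period (for example, 2×(1±25%) seconds), before starting the next set. Applying both kinds of randomization gives a scenario closer to the real world.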
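The two randomization methods can be sketched as a schedule of pauses for one virtual user. The sketch below uses the example figures from the text (think time 5×(1±20%) s, pacing 2×(1±25%) s) and assumes a uniform distribution within each range; the function names are hypothetical.

```python
import random

THINK_BASE_S, THINK_SPREAD = 5.0, 0.20    # think time 5 x (1 +/- 20%) s
PACING_BASE_S, PACING_SPREAD = 2.0, 0.25  # pacing 2 x (1 +/- 25%) s


def jittered(base_s, spread, rng=random):
    """Return base_s * (1 +/- spread), drawn uniformly from that interval."""
    return base_s * rng.uniform(1.0 - spread, 1.0 + spread)


def user_delays(requests_per_set, sets, rng=random):
    """List the (kind, seconds) pauses one virtual user makes.

    Think time separates requests inside a set; pacing separates sets.
    """
    delays = []
    for s in range(sets):
        for _ in range(requests_per_set - 1):
            delays.append(("think", jittered(THINK_BASE_S, THINK_SPREAD, rng)))
        if s < sets - 1:
            delays.append(("pacing", jittered(PACING_BASE_S, PACING_SPREAD, rng)))
    return delays


if __name__ == "__main__":
    for kind, seconds in user_delays(3, 2, random.Random(42)):
        print(f"{kind:<6} {seconds:.2f} s")
```

Passing a seeded `random.Random` makes the randomized schedule repeatable, which helps when a capacity test has to be rerun for comparison.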

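Now the actual capacity planning test can be run. The next question is how to load the users to simulate the target load. The best approach is to simulate how users communicate with the server at peak time. Is that load reached gradually over a period of time? If so, use a ramp-up test that adds x users every few seconds. Or do all the users start communicating with the system within a very short time? If so, use a flat test that loads all users onto the server simultaneously. The two types of test produce different results that are not comparable. For example, a ramp-up test might show that the system supports 5,000 users with a response time of 4 seconds or less, while a flat test might show an average response time above 4 seconds for the same 5,000 users. This is because the inherent inaccuracy of ramp-up tests prevents them from showing the exact number of concurrent users the system can support. In a portal application, for example, this uncertainty becomes apparent as the portal and its cluster grow.

This does not mean ramp-up tests should not be used. They work well when the system load increases slowly over a relatively long period, because the system can adjust over time. With a fast ramp-up, however, the system lags behind and reports a lower response time than a flat test at the same user load would. So what is the best way to determine capacity? Combining the strengths of both load types and running a series of tests gives the best results. For example, first use a ramp-up test to determine the range of users the system can support, then run a series of flat tests at different concurrent loads within that range to determine the capacity more precisely.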
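The combined approach can be sketched as a small planning step: take the ramp-up results, find the highest load that still met the response-time target, and spread a few flat-test levels around it. The helper below is hypothetical; the ±20% margin and five levels are assumptions, and the range deliberately extends below the ramp-up estimate because, as noted above, ramp-up tests can overstate capacity.

```python
def flat_test_levels(ramp_samples, sla_s, count=5, margin=0.2):
    """Pick user loads for follow-up flat tests from ramp-up results.

    ramp_samples: (users, mean_response_s) pairs observed during a ramp-up test.
    Returns `count` (>= 2) evenly spaced loads around the last load that met the SLA.
    """
    passing = [users for users, rt in ramp_samples if rt <= sla_s]
    if not passing:
        raise ValueError("no load level met the SLA during ramp-up")
    knee = max(passing)
    low, high = round(knee * (1 - margin)), round(knee * (1 + margin))
    step = (high - low) / (count - 1)
    return [round(low + i * step) for i in range(count)]


if __name__ == "__main__":
    # Hypothetical ramp-up observations and a 4-second response-time target.
    samples = [(1000, 1.2), (2000, 2.0), (3000, 3.1), (4000, 3.9), (5000, 4.6)]
    print(flat_test_levels(samples, sla_s=4.0))
```

Each returned level would then be run as its own flat test, and the highest level whose flat-test average stays within the target is the more reliable capacity figure.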

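Soak testing

A soak test is a relatively simple kind of performance test. It runs for a long time with a fixed number of concurrent users to test the overall robustness of the system. Such tests reveal any performance degradation caused by long running times, whether through memory leaks, increased garbage collection (GC), or other problems in the system. The longer the test runs, the more you learn about the system. It is a good idea to run the test twice: once with a lower user load (below system capacity, so that no execution queue forms) and once with a higher load (so that an execution queue actively forms).

The test should run for several days to give a real picture of the application's long-term health. Make sure the application under test is as close to real-world conditions as possible and that the user scenarios are realistic (virtual users should navigate the application the way real users do), so that all of the application's features are exercised. Make sure all necessary monitoring tools are running so that problems can be monitored and tracked accurately.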
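One simple way to turn soak-test monitoring data into a signal is to fit a trend line to a metric that should stay flat, such as heap usage after garbage collection. The sketch below computes a least-squares slope; the choice of metric, the sample values and the tolerance are hypothetical and must be chosen per system.

```python
def slope_per_hour(samples):
    """Least-squares slope of (hour, value) samples, e.g. heap MB after GC."""
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples")
    mean_x = sum(x for x, _ in samples) / n
    mean_y = sum(y for _, y in samples) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in samples)
    var = sum((x - mean_x) ** 2 for x, _ in samples)  # requires distinct sample times
    return cov / var


def looks_like_leak(samples, tolerance_per_hour):
    """Flag steady growth; the tolerance is a hypothetical, per-system choice."""
    return slope_per_hour(samples) > tolerance_per_hour


if __name__ == "__main__":
    # Hypothetical heap-after-GC readings (hour, MB) over a two-day soak.
    heap = [(0, 512), (12, 530), (24, 548), (36, 566), (48, 584)]
    print(slope_per_hour(heap), looks_like_leak(heap, tolerance_per_hour=1.0))
```

A steady upward slope in post-GC memory over days is a typical sign of a leak that a short benchmark would never show.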

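Peak-rest testing

Peak-rest testing combines features of ramp-up capacity planning tests and soak tests. Its goal is to determine the system's ability to recover from a high load (such as the load at peak time), drop to near idle, climb back to a high load, and drop again.

The best way to implement this test is to run a series of fast ramp-up tests, each followed by a period of steady state (its length depending on business requirements), then a sharp drop in load during which the system can settle, and then another fast ramp-up, repeating the cycle. This answers questions such as: Does the second peak reproduce the first? Is each subsequent peak equal to or greater than the first? Does the system show signs of memory or GC degradation during the test? The longer the test runs (repeating the peak/idle cycle continuously), the more you learn about the system's long-term health.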
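The peak-rest cycle can be described as a list of load phases, and the peaks it produces can be compared against the first one. The sketch below is a hypothetical scenario builder and check; the phase durations, user counts and the 10% tolerance are assumptions, not values from the text.

```python
def peak_rest_profile(cycles, peak_users, ramp_s, hold_s, rest_s, rest_users=0):
    """List of (duration_s, start_users, end_users) phases for a peak-rest test."""
    phases = []
    for _ in range(cycles):
        phases.append((ramp_s, rest_users, peak_users))  # fast ramp-up
        phases.append((hold_s, peak_users, peak_users))  # steady peak
        phases.append((rest_s, rest_users, rest_users))  # sharp drop, then rest
    return phases


def peaks_degrading(peak_response_times_s, tolerance=0.10):
    """True if any later peak is more than `tolerance` slower than the first."""
    first = peak_response_times_s[0]
    return any(rt > first * (1 + tolerance) for rt in peak_response_times_s[1:])


if __name__ == "__main__":
    for phase in peak_rest_profile(2, 500, ramp_s=60, hold_s=600, rest_s=300):
        print(phase)
    print(peaks_degrading([2.0, 2.1, 2.5]))  # third peak is 25% slower than the first
```

Comparing each peak with the first one answers the text's question of whether later peaks reproduce the first, while the memory and GC metrics collected during the rest phases show whether the system really recovers.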

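Principles

1) Where circumstances allow, run the tests independently with several tools or methods and cross-check the results, so that the flaws of a single tool or method do not distort the accuracy of the results;

2) Different systems have different performance concerns, so each case should be analyzed on its own merits;

3) Look for bottlenecks step by step, from the easiest to the hardest:

Server hardware and network bottlenecks (network factors can be ignored in a LAN environment)

Application server, middleware and operating system bottlenecks (parameter settings of the database, web server and so on)

Application business bottlenecks (SQL statements, database design, business logic, algorithms, data and so on)

4) Do not change system parameters arbitrarily during performance tuning. Start from the parameter settings in the user configuration manual and optimize gradually for the actual on-site environment. Tune only one area at a time (for example, analyze CPU usage) and change only one setting at a time, so that related factors do not interfere with each other;

5) Record the tuning process carefully, keeping the operations and results of each step for comparison and analysis;

6) Performance tuning is empirical work that requires a lot of thinking, analysis, communication and accumulated experience;

7) Understand "limited resources, unlimited demand";

8) Wherever possible, define criteria for ending the tuning work before starting.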

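Tools

Introduction to automated testing tools: LoadRunner (LR)

HP LoadRunner is a load testing tool that predicts system behavior and performance. It confirms and locates problems by simulating tens of millions of users applying concurrent load while monitoring performance in real time, and it can test an entire enterprise architecture. With LoadRunner, enterprises can minimize testing time, optimize performance, and shorten the release cycle of their applications.

Enterprise network application environments must support large numbers of users, and their network architectures contain many kinds of application environments built from software and hardware supplied by different vendors. Unpredictable user loads and increasingly complex application environments leave companies constantly worried about slow user response times, system crashes and similar problems, all of which inevitably cost revenue. LoadRunner lets enterprises protect their sources of revenue, make the most of existing IT resources without buying extra hardware, and ensure that end users rate the quality, reliability and scalability of the tested application well at every stage of its use.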

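Easily create virtual users

With LoadRunner's Virtual User Generator, you can easily create system load. The engine generates virtual users that simulate the business operations of real users. It first records a business process (such as placing an order or booking a flight) and then converts it into a test script. With virtual users, you can generate thousands of simultaneous user accesses from Windows, UNIX or Linux machines, so LoadRunner greatly reduces the hardware and human resources that load testing requires. In addition, LoadRunner's patented TurboLoad technology provides high adaptability: TurboLoad lets you generate loads of hundreds of thousands of online users and millions of hits per day.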

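After creating a test script with the Virtual User Generator, you can parameterize it, which lets you test your application with several different sets of real data and so reflect the system's load capacity. Take an order entry process as an example: parameterization replaces fixed data in the recording, such as the order number and customer name, with variable values. Filling these variables with plausible order numbers and customer names lets the script match the behavior of many real users.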
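Parameterization in general can be illustrated without any particular tool: placeholders replace the fixed values captured during recording, and each iteration fills them from a data set. The sketch below is a generic Python illustration, not LoadRunner's script syntax or API; the request format, field names and data rows are hypothetical.

```python
import csv
import io
import itertools
from string import Template

# Recorded request with its fixed values replaced by placeholders (hypothetical format).
RECORDED = Template("POST /orders order_id=$order_id&customer=$customer")

# Hypothetical data set; in practice this could be exported from a database.
DATA = """order_id,customer
10001,Alice
10002,Bob
10003,Carol
"""


def parameterized_requests(template, data_csv, iterations):
    """Yield one request per iteration, cycling through the data rows."""
    rows = list(csv.DictReader(io.StringIO(data_csv)))
    for row in itertools.islice(itertools.cycle(rows), iterations):
        yield template.substitute(row)


if __name__ == "__main__":
    for request in parameterized_requests(RECORDED, DATA, 4):
        print(request)
```

Cycling through the rows is only one possible policy; tools typically also offer sequential, random or unique-per-user assignment, which matters when, for example, each order number may be submitted only once.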

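LoadRunner automates the parameterization of test data through its Data Wizard. The Data Wizard connects directly to a database server, from which you can fetch the data you need (such as order numbers and user names) and feed it straight into the test script. This avoids handling the data by hand and saves a great deal of time.

To make sure your virtual users behave like real users, you can use LoadRunner to control certain behavioral characteristics. For example, with a single click you can control the number of transactions, transaction frequency, user think time, connection speed and so on.

Creating realistic load

Once the virtual users are created, you need to set up your load scenario, the mix of business processes, and the number of virtual users. With LoadRunner's Controller you can quickly organize multi-user test scenarios. The Controller's Rendezvous feature provides an interactive environment in which you can both build sustained, cyclic load and manage and drive the load test scenario.

You can also use its scheduling service to define when users access the system to generate load, which automates the testing process. The Controller can also be used to constrain your load scenario so that all users perform an action at the same time, such as logging in to an inventory application, to simulate peak load. In addition, you can monitor the performance of each component of the system architecture, including servers, databases and network devices, to help customers decide on the system configuration.

LoadRunner's AutoLoad technology gives you more testing flexibility. With AutoLoad you can set test goals in advance based on the number of users and streamline the testing process. For example, your goal might be to determine how many hits per second or transactions per second your application can sustain.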

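Maximizing return on investment

All Mercury Interactive products and services are designed to be integrated and to work together with full compatibility. Because they share the same core technology, test scripts from LoadRunner and ActiveTest™ can be reused for performance monitoring in Mercury Interactive's load testing services. With Mercury Interactive's monitoring products, Topaz™ and ActiveWatch™, test scripts can be reused to balance the return on investment. More importantly, you get a complete application performance management solution covering both pre-deployment testing and monitoring of production systems.

Support for Wireless Application Protocol

As the number and variety of wireless devices grow, your test plan needs to cover both traditional browser-based users and wireless Internet devices such as mobile phones and PDAs. LoadRunner supports the two most widely used protocols, WAP and i-mode. In addition, by load testing the entire system architecture, LoadRunner lets you fully test these wireless Internet systems by recording a script only once.

Support for MediaStream applications

LoadRunner also supports MediaStream applications. To ensure that end users get a good experience and high-quality media streams, you need to test your MediaStream applications. With LoadRunner you can record and replay any popular multimedia streaming format to diagnose system performance problems, find their causes, and analyze data quality.

Support for complete enterprise application environments

LoadRunner supports a wide range of protocols and can test a variety of IT infrastructures.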

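Performance testing tool: PerformanceRunner

PerformanceRunner (PR) is performance testing software. It simulates highly concurrent clients that generate concurrent pressure on the server through protocols and messages, testing the load and stress capacity of the whole system. It supports stress testing, performance testing, configuration testing, peak testing and more.

Its features are as follows:

●Recording test scripts

PR records an application's protocol traffic and messages by listening on the application's protocol and port, and creates test scripts from them. PR uses Java as its standard test scripting language and supports parameterization, checkpoints and other features.

●Correlation and sessions

In applications, especially B/S applications, sessions are handled through "correlation". The user only needs to click the "Correlation" button, and PR automatically scans the test script and sets up correlation, making it possible to test applications that use sessions.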
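What correlation does can be shown in a few lines: a dynamic value (here a session ID) is extracted from one server response and substituted into the next request, instead of replaying the stale value captured at recording time. The sketch below is a generic Python illustration, not PR's Java API; the hidden-field pattern and the `{sessionId}` placeholder are hypothetical.

```python
import re

# Hypothetical pattern: the server returns the session ID in a hidden form field.
SESSION_RE = re.compile(r'name="sessionId" value="([^"]+)"')


def correlate(login_response: str, next_request_template: str) -> str:
    """Copy the dynamic session ID from one response into the next request."""
    match = SESSION_RE.search(login_response)
    if match is None:
        raise ValueError("session ID not found; the correlation rule needs updating")
    return next_request_template.replace("{sessionId}", match.group(1))


if __name__ == "__main__":
    html = '<input type="hidden" name="sessionId" value="a1b2c3">'
    print(correlate(html, "GET /inbox?sid={sessionId}"))
```

Raising an error when the pattern does not match is deliberate: a silently missing session ID would let every later request fail or hit an error page, which would distort the measured response times.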

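●Rendezvous points

PR supports rendezvous points, which can be set through functions. A rendezvous point ensures that the concurrent pressure reaches the expected level at a single point in time, making concurrency tests more realistic and credible.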
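A rendezvous point behaves like a barrier: each virtual user blocks when it reaches the point, and all are released together once every user has arrived. The sketch below illustrates the idea with Python's `threading.Barrier`; it is not PR's or LoadRunner's API, and the staggered sleeps merely stand in for users reaching the point at different times.

```python
import threading
import time


def rendezvous_demo(users, stagger_s=0.02):
    """Virtual users arrive at different times, wait at a rendezvous, then fire together.

    Returns the spread, in seconds, between the first and last release.
    """
    barrier = threading.Barrier(users)  # releases only when all `users` have arrived
    fired_at = []
    lock = threading.Lock()

    def virtual_user(i):
        time.sleep(stagger_s * i)  # users reach the point at different times
        barrier.wait()             # rendezvous: block until everyone is here
        with lock:
            fired_at.append(time.perf_counter())  # the concurrent request would go here

    threads = [threading.Thread(target=virtual_user, args=(i,)) for i in range(users)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return max(fired_at) - min(fired_at)


if __name__ == "__main__":
    print(f"release spread: {rendezvous_demo(5) * 1000:.2f} ms")
```

Without the barrier the five users would start their requests up to 80 ms apart; with it they are released together, so the server sees the intended burst of simultaneous requests.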

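●Generating concurrent pressure

After a performance script is created, you can generate pressure by creating a project and setting a pressure model. PR can generate a concurrency of up to 5,000 from a single machine.

●Application scenario support

Application scenario tests can be run by setting pressure curves for the scripts of multiple projects.

●Execution monitoring

After a performance test starts, the system generates pressure according to the configured scenario. During execution you need to watch how the scripts are running and the performance indicators of the system under test; PR shows this information through execution monitoring.

●Performance analysis reports

When a performance test run finishes, PR creates various performance analysis reports, covering CPU, throughput, concurrency and more.

System requirements: Windows (32-bit/64-bit) 2000/XP/Vista/2003/7/2008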

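Questions

This article has introduced several methods of performance testing. Depending on business requirements, the development cycle and the application's life cycle, some tests will suit a particular enterprise better than others. In every case, however, you should ask yourself some basic questions before deciding on a particular kind of test. The answers will determine which testing method is best.

These questions include:

How repeatable do the results need to be?

How many times will the test need to be run and rerun?

Which stage of the development cycle are you in?

What are your business requirements?

What are your user requirements?

How long do you expect the production system to run between maintenance downtimes?

What is the expected user load on a normal business day?

Matching the answers to these questions against the types of performance test described above should let you build a sound plan for testing the overall performance of your application.

Performance testing is a class of tests implemented and executed to describe and evaluate the performance-related characteristics of the target of test, such as timing profiles, execution flow, response times, and operational reliability and limits. Different types of performance test focus on different test goals, and they are carried out throughout the software development life cycle (SDLC). Initially, in architecture iterations, performance testing focuses on identifying and eliminating architecture-related performance bottlenecks. In construction iterations, other types of performance test are implemented and executed to tune the software and environment (optimizing response time and resources) and to verify that the application and system can handle high-load and high-stress situations, such as large numbers of transactions, clients and/or data.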